Same “Scraping”, Different Scopes

Information is more than just a resource – it is the foundation of competitive advantage. That’s why companies depend on accurate data to understand customers, forecast trends, and make strategic decisions.

But with information scattered across websites, databases, and documents, manual collection is slow, resource-intensive, and prone to error. This reality has elevated automation technologies from a convenience to a necessity.

By replacing repetitive manual tasks with intelligent extraction methods, companies can capture vast amounts of information with speed, precision, and consistency. Among the most prominent approaches, data scraping and web scraping stand out as key methods for transforming raw information into structured, analysis-ready datasets.

While data scraping and web scraping share the same core principle of automated data extraction, their scopes differ. Data scraping encompasses a wider range of sources, whereas web scraping focuses on retrieving and parsing content from web-based sources. Recognizing this distinction helps teams avoid choosing the wrong tool, reduce compliance risk, and keep operations efficient.

The rest of this post examines both methods from technical, operational, and governance perspectives.

Data Scraping vs. Web Scraping: What’s the Difference?

| Dimension | Data Scraping | Web Scraping |
| --- | --- | --- |
| Scope | Broader category, includes websites plus databases, APIs, files (CSV, Excel), PDFs, emails, and more. | Subset of data scraping, focused only on extracting information from websites. |
| Data Sources | Structured (databases, CSVs, APIs) and unstructured (PDFs, scanned docs, emails, logs). | HTML, DOM elements, JavaScript-rendered pages, embedded JSON. |
| Methodology | Involves database queries, file parsing, API integrations, OCR for scanned docs, and ETL workflows. | Uses HTTP requests, selectors (CSS/XPath), or headless browsers to fetch and parse web content. |
| Reliability | More stable for structured sources; accuracy challenges arise mainly from unstructured inputs. | Fragile; easily disrupted by layout changes, CAPTCHAs, or bot-detection systems. |
| Primary Tools | Alteryx, Talend Data Integration, Apache NiFi, AWS Textract. | BeautifulSoup, Scrapy, Selenium, proxy services. |
| Use Cases | Data consolidation, reporting, migration, compliance monitoring, and enterprise analytics. | Competitive intelligence, price monitoring, content aggregation, and trend tracking. |
| Compliance | Often safer; typically applied to internal or permissioned sources with clearer governance. | Riskier; may conflict with Terms of Service or data protection laws. |
| Operational Effort | Higher initial setup (connectors, transformations), but lower ongoing fragility for structured data. | Lower startup cost, but high maintenance over time due to site changes. |

What Are Data Scraping and Web Scraping?

Definition of Data Scraping:

Data scraping refers to the automated collection of information from a wide variety of digital sources, not limited to the web. While it can include website content, data scraping also targets documents, spreadsheets, PDFs, emails, APIs, system logs, and databases – transforming both structured and unstructured information into usable formats.

Compared with web scraping, data scraping has a broader scope, allowing organizations to extract data wherever it resides. This versatility makes it a powerful tool for consolidating scattered information across multiple systems and formats.

Businesses often rely on data scraping for document processing, database integration, financial reporting, compliance monitoring, and large-scale data migration. Automating extraction from diverse sources reduces manual workload and ensures faster, more consistent access to critical information.

Definition of Web Scraping:

Web scraping refers to the automated extraction of information from websites and its transformation into structured datasets. Instead of manually copying data, scraping tools collect and parse web content – such as HTML elements, DOM structures, or embedded JSON – so that it can be stored and analyzed in formats like CSV, JSON, or databases.

The practice typically focuses on publicly available web pages, including listings, product catalogs, search results, and sitemaps. In some cases, web scraping may be applied to authenticated areas where permission is granted, but it should always remain within the boundaries of website policies and legal compliance.

Companies adopt web scraping to gain competitive insights, monitor market trends, aggregate content, enhance SEO tracking, and enrich product catalogs. It becomes especially valuable when official APIs are unavailable, restricted, or insufficient, offering a practical way to turn unstructured online information into consistent, actionable data.

Operational Workflow of Data Scraping vs. Web Scraping

How Data Scraping Works

Step 1: Defining Data Sources and Objectives

The process begins by determining which sources to extract information from, such as databases, spreadsheets, PDFs, emails, APIs, or system logs. At this stage, businesses also define the purpose of the extraction – whether for financial reporting, compliance monitoring, data migration, or analytics – so that the workflow is aligned with specific goals.

Step 2: Accessing and Collecting Raw Data

Depending on the source, different methods are used to acquire raw data. This may involve direct database queries, connecting to APIs, reading structured files like CSVs, or applying OCR and parsing techniques to extract content from unstructured documents such as scanned PDFs. Security permissions and authentication are often required at this stage.
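To make the collection step concrete, here is a minimal sketch that reads raw rows from two of the source types mentioned above: a structured CSV file and a relational database. The CSV content, the `payments` table, and the function names are hypothetical stand-ins; an in-memory SQLite database is used only so the example runs anywhere, and OCR and API sources are left out.

```python
import csv
import io
import sqlite3

# Hypothetical CSV export; in practice this would be a file on disk.
CSV_TEXT = "invoice_id,vendor,amount\nINV-001,Acme,120.50\nINV-002,Globex,89.00\n"


def read_csv_rows(text):
    """Parse CSV text into a list of dicts keyed by the header row."""
    return list(csv.DictReader(io.StringIO(text)))


def read_db_rows(conn):
    """Run a read-only query against the (hypothetical) payments table."""
    conn.row_factory = sqlite3.Row  # rows become addressable by column name
    cursor = conn.execute(
        "SELECT invoice_id, paid_on FROM payments ORDER BY invoice_id"
    )
    return [dict(row) for row in cursor.fetchall()]


# In-memory database standing in for a real, access-controlled source system.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE payments (invoice_id TEXT, paid_on TEXT)")
conn.execute("INSERT INTO payments VALUES ('INV-001', '2024-03-01')")
conn.commit()

csv_rows = read_csv_rows(CSV_TEXT)
db_rows = read_db_rows(conn)
```

In a real deployment the connection would carry credentials and permissions, which is where the authentication requirements noted above come in.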

Step 3: Extracting and Standardizing Information

Once the raw data is collected, relevant fields are extracted according to a predefined schema. The information is then standardized, for example, by converting dates to a common format, aligning currencies, or applying consistent naming conventions. This step ensures the data is uniform and ready for integration.
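As an illustration of standardization, the sketch below maps a raw record onto a small target schema, converting dates to ISO 8601, amounts to a single currency, and identifiers to a consistent style. The input field names, the accepted date formats, and the fixed exchange rates are all assumptions made for the example, not values from any real system.

```python
from datetime import datetime
from decimal import Decimal

# Assumed input date formats; extend to match the actual sources.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%b %d, %Y")

# Illustrative fixed rates only; a real pipeline would look these up.
RATES_TO_USD = {"USD": Decimal("1"), "EUR": Decimal("1.10")}


def normalize_date(value):
    """Return the date as YYYY-MM-DD, trying each known format in turn."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {value!r}")


def normalize_amount(amount, currency):
    """Strip thousands separators and convert to USD with two decimals."""
    value = Decimal(amount.replace(",", "")) * RATES_TO_USD[currency]
    return value.quantize(Decimal("0.01"))


def standardize(record):
    """Map a raw record onto the target schema with consistent naming."""
    return {
        "invoice_id": record["Invoice No"].strip().upper(),
        "due_date": normalize_date(record["Due"]),
        "amount_usd": normalize_amount(record["Amount"], record["Currency"]),
    }


raw = {"Invoice No": " inv-7 ", "Due": "31/01/2024",
       "Amount": "1,000.00", "Currency": "EUR"}
clean = standardize(raw)
```

Using `Decimal` rather than `float` is a deliberate choice here, since binary floating point can introduce rounding drift in monetary values.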

Step 4: Storing and Integrating Data

The cleaned dataset is stored in a central repository such as a relational database, data warehouse, or cloud storage. From there, it can be integrated into existing enterprise systems, including ERP, CRM, or business intelligence platforms, ensuring seamless access for analysis and reporting.
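A minimal sketch of the storage step follows, assuming a single hypothetical `invoices` table keyed by `invoice_id`. SQLite stands in for the central repository; a data warehouse or cloud store would follow the same idea with a different driver.

```python
import sqlite3


def store(conn, records):
    """Insert or update cleaned records so that reruns do not create duplicates."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS invoices (
               invoice_id TEXT PRIMARY KEY,
               due_date   TEXT,
               amount_usd TEXT
           )"""
    )
    # Named placeholders are filled from each record's keys.
    conn.executemany(
        "INSERT OR REPLACE INTO invoices "
        "VALUES (:invoice_id, :due_date, :amount_usd)",
        records,
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
store(conn, [{"invoice_id": "INV-1", "due_date": "2024-01-31", "amount_usd": "10.00"}])
# Loading the same invoice again updates the row instead of duplicating it.
store(conn, [{"invoice_id": "INV-1", "due_date": "2024-02-15", "amount_usd": "10.00"}])
```

Keying the table on a stable identifier keeps the load idempotent, which makes scheduled reloads safe to repeat.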

Step 5: Monitoring and Updating Processes

Finally, the data scraping workflow requires ongoing monitoring and refinement. Automated checks and validations help detect missing or incorrect values, while scheduled updates ensure that data stays fresh and relevant. Over time, workflows may be optimized for speed, accuracy, and compliance with data governance policies.
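The automated checks described above could look something like the following sketch. The field names, the duplicate rule on `invoice_id`, and the one-day freshness threshold are hypothetical; real rules would come from the organization's data governance policies.

```python
from datetime import datetime, timedelta


def validate(records, required_fields):
    """Return a list of human-readable issues found in the records."""
    issues = []
    seen_ids = set()
    for index, record in enumerate(records):
        for field in required_fields:
            if record.get(field) in (None, ""):
                issues.append(f"row {index}: missing {field}")
        record_id = record.get("invoice_id")
        if record_id in seen_ids:
            issues.append(f"row {index}: duplicate invoice_id {record_id}")
        elif record_id:
            seen_ids.add(record_id)
    return issues


def is_stale(last_loaded, now, max_age=timedelta(days=1)):
    """True when the last successful load is older than the allowed age."""
    return now - last_loaded > max_age


records = [
    {"invoice_id": "INV-1", "due_date": "2024-01-31"},
    {"invoice_id": "INV-1", "due_date": ""},
]
issues = validate(records, ["invoice_id", "due_date"])
stale = is_stale(datetime(2024, 1, 1), datetime(2024, 1, 3))
```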

How Web Scraping Works

Step 1: Identifying Targets and Requirements

The process starts by defining what information is needed and where it will come from. This involves selecting the websites to scrape and outlining a data schema that specifies which fields should be captured. At the same time, legal and ethical considerations must be reviewed, including website Terms of Service and robots.txt rules.
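As a rough sketch of this planning step, the example below records a target and its field schema, then checks a URL against robots.txt rules using Python's standard `urllib.robotparser`. The `ScrapeTarget` class, the `example-bot` user agent, the example.com URLs, and the robots.txt content are all made up for illustration. A robots.txt check does not replace a review of the site's Terms of Service.

```python
from dataclasses import dataclass
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; normally fetched from the target site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


@dataclass(frozen=True)
class ScrapeTarget:
    """A site to scrape plus the fields the data schema expects."""
    url: str
    fields: tuple


def is_allowed(robots_text, user_agent, url):
    """Check a URL against robots.txt rules supplied as text."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser.can_fetch(user_agent, url)


target = ScrapeTarget("https://example.com/products/", ("name", "price"))
allowed = is_allowed(ROBOTS_TXT, "example-bot", target.url)
blocked = is_allowed(ROBOTS_TXT, "example-bot", "https://example.com/private/report")
```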

Step 2: Collecting Website Content

Once targets are defined, the scraper sends HTTP requests to retrieve the page content. For static websites, raw HTML can be accessed directly, while dynamic websites may require a headless browser to render JavaScript elements. This stage may also involve handling pagination, redirects, and region-specific settings such as language or currency.
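For static pages, the collection step can be sketched with the standard library alone. The `page` and `lang` query parameters, the user agent string, and the function names are assumptions for illustration; real sites paginate in many different ways, and JavaScript-rendered pages would need a headless browser, which this sketch does not cover.

```python
import urllib.request
from urllib.parse import urlencode


def fetch(url, timeout=10.0):
    """Fetch a static page over HTTP and return its decoded body."""
    request = urllib.request.Request(url, headers={"User-Agent": "example-bot/0.1"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def page_urls(base_url, pages, lang="en"):
    """Build one URL per page, carrying a region-specific language setting."""
    return [
        f"{base_url}?{urlencode({'page': number, 'lang': lang})}"
        for number in range(1, pages + 1)
    ]


def collect(base_url, pages, fetch_page=fetch):
    """Fetch every page; fetch_page is injectable so tests can avoid the network."""
    return {url: fetch_page(url) for url in page_urls(base_url, pages)}


# Offline usage with a stub in place of real HTTP requests.
pages = collect("https://example.com/list", 2, fetch_page=lambda url: "<html></html>")
```

Passing the fetch function as a parameter keeps the pagination logic testable and makes it easy to swap in a rendering browser later.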

Step 3: Extracting and Cleaning Data

After the content is collected, the next step is parsing and extracting the relevant information. Tools like CSS selectors, XPath, or JSONPath help locate and capture data fields. The extracted data is then cleaned by removing duplicates, normalizing formats, and validating against predefined rules to ensure accuracy and consistency.
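The sketch below shows parsing and cleaning on a small hypothetical HTML fragment. It uses Python's built-in `html.parser` as a dependency-free stand-in for CSS or XPath selectors; the `product`, `name`, and `price` class names are assumptions about the page, not a real site's markup.

```python
from html.parser import HTMLParser

HTML = """
<div class="product"><span class="name">Widget</span><span class="price">$1,299.00</span></div>
<div class="product"><span class="name">Widget</span><span class="price">$1,299.00</span></div>
<div class="product"><span class="name">Gadget</span></div>
"""


class ProductParser(HTMLParser):
    """Collect name and price text from elements marked with known classes."""

    def __init__(self):
        super().__init__()
        self.records = []
        self._field = None  # field that the next text node belongs to

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "product" in classes:
            self.records.append({})
        elif self.records and ("name" in classes or "price" in classes):
            self._field = "name" if "name" in classes else "price"

    def handle_data(self, data):
        if self._field and data.strip():
            self.records[-1][self._field] = data.strip()
            self._field = None


def clean(records):
    """Drop incomplete rows, normalize prices to floats, and remove duplicates."""
    seen, cleaned = set(), []
    for record in records:
        if "name" not in record or "price" not in record:
            continue  # fails the validation rule: both fields are required
        price = float(record["price"].replace("$", "").replace(",", ""))
        key = (record["name"].lower(), price)
        if key not in seen:
            seen.add(key)
            cleaned.append({"name": record["name"], "price": price})
    return cleaned


parser = ProductParser()
parser.feed(HTML)
products = clean(parser.records)
```

Libraries such as BeautifulSoup or Scrapy offer far more convenient selectors; the standard-library parser is used here only to keep the example self-contained.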

Step 4: Storing and Delivering Data

Clean data is stored in databases, warehouses, or structured files such as CSV or JSON. From there, it can be integrated into business intelligence systems, dashboards, or machine learning pipelines. Depending on requirements, the data may be delivered via APIs, automated reports, or scheduled data feeds.
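As a small illustration of delivery formats, the following sketch serializes cleaned records to CSV and to JSON Lines. The function names and the sample record are hypothetical, and the output is built in memory; writing to a file or pushing to a feed would wrap the same calls.

```python
import csv
import io
import json


def to_csv(records, fields):
    """Serialize records to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fields, lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def to_json_lines(records):
    """Serialize records as one JSON object per line, with stable key order."""
    return "\n".join(json.dumps(record, sort_keys=True) for record in records)


records = [{"name": "Widget", "price": 1299.0}]
csv_text = to_csv(records, ["name", "price"])
jsonl_text = to_json_lines(records)
```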

Step 5: Monitoring and Maintenance

Finally, scraping workflows require continuous monitoring to detect errors, layout changes, or blocked requests. Logging and alerting systems help track performance, while periodic updates to extraction rules ensure the pipeline remains reliable.
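One way to approach this kind of monitoring is sketched below: count responses that suggest blocking, and flag fields whose fill rate drops, which often signals that a selector no longer matches the page layout. The status codes treated as blocking, the 90% threshold, and the logger name are assumptions chosen for the example.

```python
import logging

logger = logging.getLogger("scraper.monitor")

# Status codes commonly returned when requests are refused or throttled.
BLOCK_STATUSES = {403, 429}


def check_run(status_codes, records, required_fields, min_fill_rate=0.9):
    """Return alert messages for blocked requests and sparsely filled fields."""
    alerts = []
    blocked = sum(1 for code in status_codes if code in BLOCK_STATUSES)
    if blocked:
        alerts.append(f"{blocked} blocked request(s)")
    for field in required_fields:
        filled = sum(1 for record in records if record.get(field) not in (None, ""))
        rate = filled / len(records) if records else 0.0
        if rate < min_fill_rate:
            # A sudden drop usually means the extraction rule needs updating.
            alerts.append(f"field '{field}' fill rate {rate:.0%}")
    for alert in alerts:
        logger.warning(alert)
    return alerts


alerts = check_run(
    status_codes=[200, 429],
    records=[{"name": "a", "price": ""}, {"name": "b", "price": 1}],
    required_fields=["name", "price"],
)
```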

Data Scraping vs. Web Scraping: Advantages and Limitations

| Aspect | Data Scraping | Web Scraping |
| --- | --- | --- |
| Advantages | Broad source coverage: Supports files, databases, APIs, emails, logs, and scanned documents – making it ideal for enterprise consolidation. Better predictability for structured sources: Extracting from CSV/DB/API is more stable and contract-driven than scraping UIs. Enables comprehensive ETL/ELT workflows: Facilitates normalization, validation, and lineage tracking for analytics and reporting. Regulatory and audit fit: When properly permissioned, file- and DB-based extraction maps well to governance and provenance requirements. | Access to otherwise unavailable data: Enables capture of information exposed only via a website UI (product listings, editorial content, public directories). Near-real-time feeds: Can provide frequent updates for time-sensitive use cases (price monitoring, news aggregation). Cost-effective for many use cases: Low initial tooling cost (libraries and frameworks) compared with licensing data or building integrations. Flexible data coverage: Can extract heterogeneous web artifacts (text, images, embedded JSON) across many domains. |
| Disadvantages | Complexity with unstructured inputs: Scanned PDFs and OCR-reliant documents require additional processing and error handling. Connector and credential management: Securely managing numerous source credentials and access controls increases operational overhead. Higher initial engineering effort: Building robust parsers, schema mappings, and transformation logic can be time-consuming. Potential data quality variability: Aggregating disparate schemas often reveals inconsistent or missing fields that need reconciliation. | Fragility to UI changes: Selectors and parsing logic break when site layouts or DOM structures change, requiring ongoing maintenance. Anti-bot & blocking risk: CAPTCHAs, rate limits, and IP bans necessitate mitigation (proxies, throttling) that increase complexity and cost. Legal and policy risk: Potential ToS violations or data-protection issues if not reviewed and managed. Resource intensity for dynamic content: Rendering JavaScript in headless browsers increases infrastructure and operational costs. |

Representative Use Cases

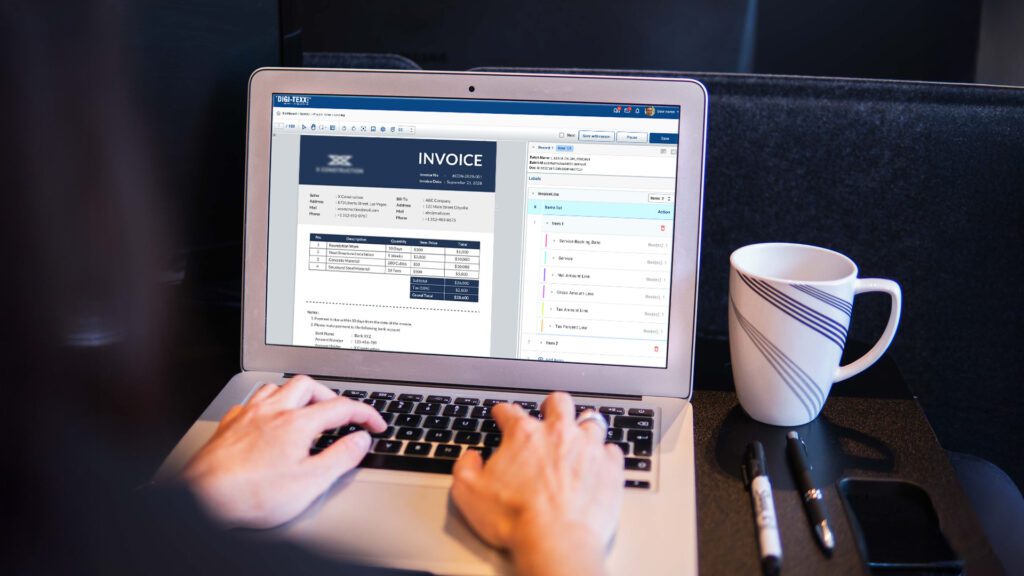

Common Use Case for Data Scraping: Automated Invoice Processing in Enterprise Finance

A prominent use case of data scraping lies in financial automation, particularly in handling invoices. In large enterprises, finance teams are often burdened with thousands of invoices arriving in varied formats – PDFs, scanned documents, or Excel sheets. Manual entry not only slows down operations but also introduces risks of human error and compliance issues.

Data scraping automates this process by extracting structured fields such as invoice numbers, vendor details, line items, payment amounts, and due dates. Using OCR combined with AI-based parsing, the technology can handle unstructured or semi-structured formats with high accuracy.
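To give a feel for field extraction, here is a deliberately simple sketch that pulls three fields out of text that an OCR step is assumed to have already produced. It uses plain regular expressions rather than AI-based parsing, and the label wording ("Invoice No", "Due Date", "Total"), the date format, and the sample text are hypothetical; real invoices vary far more than this.

```python
import re

# Illustrative patterns for one assumed invoice layout.
PATTERNS = {
    "invoice_number": re.compile(
        r"Invoice\s*(?:No\.?|#)\s*[:\-]?\s*([A-Z0-9\-]+)", re.IGNORECASE
    ),
    "due_date": re.compile(
        r"Due\s*Date\s*[:\-]?\s*(\d{4}-\d{2}-\d{2})", re.IGNORECASE
    ),
    "total": re.compile(
        r"\bTotal\s*[:\-]?\s*\$?\s*([\d,]+\.\d{2})", re.IGNORECASE
    ),
}


def extract_fields(text):
    """Return each field's first match, or None when the label is absent."""
    fields = {}
    for name, pattern in PATTERNS.items():
        match = pattern.search(text)
        fields[name] = match.group(1) if match else None
    return fields


# Hypothetical OCR output for a single invoice.
OCR_TEXT = "ACME Corp\nInvoice No: INV-2024-001\nDue Date: 2024-05-31\nTotal: $1,250.00\n"
invoice = extract_fields(OCR_TEXT)
```

Returning `None` for missing fields, rather than raising, lets a later validation step decide which invoices need human review.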

Extracted data is then validated and integrated into ERP or accounting systems automatically. For businesses, the impact is significant: faster invoice processing cycles, reduced manpower costs, higher accuracy in financial records, and improved compliance with tax and audit requirements.

This kind of automation also frees finance teams to focus on strategic tasks such as cash flow optimization and vendor relationship management, instead of repetitive data entry.

Common Use Case for Web Scraping: Online Historical Obituary Data Collection

One of the most compelling applications of web scraping is DIGI-TEXX’s solution for collecting historical obituary data. Traditionally, researchers, genealogy companies, and historical institutions had to manually search through archives, newspapers, and church records – a process that was both time-intensive and error-prone.

DIGI-TEXX has automated this workflow using advanced machine learning (ML) and natural language processing (NLP) technologies. The solution scrapes online sources such as school websites, parish records, and digital archives, extracting critical details including names, death dates, and even photographs.

With an accuracy rate of up to 95%, it can process over 450,000 records from a single URL, consolidating structured datasets in just 20-30 minutes for simple text-based entries or within a few days for more complex formats like scanned PDFs.

This capability has transformed data accessibility for clients ranging from genealogy platforms to AI developers. By providing reliable, large-scale datasets, DIGI-TEXX enables more accurate historical preservation, database enrichment, and the training of AI systems requiring high-quality demographic information.

Recommended Tools for Implementation

Top 5 Data Scraping Tools

| Tool | Definition | Origin | Core Features |
| --- | --- | --- | --- |
| Alteryx | End-to-end data analytics and preparation platform. | Commercial platform widely used in enterprise analytics. | Drag-and-drop workflows, connectors, advanced analytics, and reporting. |
| Talend Data Integration | Open-source & enterprise data integration platform. | Built for ETL, API, and cloud data pipelines. | 1,000+ connectors, batch & real-time processing, data governance. |
| UiPath Document Understanding | AI-powered Intelligent Document Processing (IDP). | Developed by UiPath, a global leader in RPA. | OCR, ML extraction, human validation, compliance-ready workflows. |
| AWS Textract | Cloud-native document extraction service. | Provided by Amazon Web Services. | OCR, table/field extraction, integrates with the AWS ecosystem. |
| Apache NiFi | Open-source data ingestion and flow automation tool. | Maintained by the Apache Software Foundation for scalable, secure data flows. | Visual flow design, real-time streaming, provenance tracking, and strong security. |

Top 5 Web Scraping Tools

| Tool | Definition | Origin | Core Features |
| --- | --- | --- | --- |
| BeautifulSoup | Python library for parsing HTML/XML and navigating page structures. | Open-source, created in the early 2000s to simplify web data parsing for researchers and developers. | Easy-to-use API, DOM navigation, supports multiple parsers, and works well for small to medium-scale scraping. |
| Scrapy | A full-fledged Python framework for large-scale web crawling and scraping. | Open-source, designed for production-grade scrapers and scalable data collection. | Spiders, pipelines, asynchronous crawling, and built-in data storage support. |
| Selenium | Browser automation framework that can simulate user interaction with websites. | Originated in 2004 as a testing tool, later adapted for scraping dynamic content. | Renders JavaScript, handles forms, clicks, logins, and cookies; supports multiple browsers. |
| Octoparse | A commercial no-code scraping tool with a visual workflow builder. | Launched for non-technical users needing fast and scalable scraping solutions. | Drag-and-drop interface, scheduling, cloud-based extraction, proxy management. |
| Historical Data Web Scraping Solution | Specialized platform for archival and historical data extraction using ML and NLP. | Developed by DIGI-TEXX (Vietnam, global reach) to serve enterprises and institutions. | Crawls PDFs/HTML, extracts entities (names, dates, images) with ~95% accuracy, processes up to 450,000 records per URL. |

Determining the Appropriate Methodology

Deciding between data scraping and web scraping is ultimately about aligning technology with business objectives. While both methods revolve around extracting valuable information, they differ in scope, complexity, and application.

At its core, data scraping is the broader discipline of automated data extraction, covering multiple formats such as documents, spreadsheets, PDFs, databases, and APIs. It provides organizations with a versatile foundation for system integration, compliance monitoring, reporting, and large-scale information management.

Web scraping, by contrast, is a specialized technique dedicated to website sources. By focusing exclusively on websites, it enables businesses to capture market signals, customer sentiment, and digital content with precision.

In the end, the decision is about alignment: companies should select the methodology that matches their data sources, operational priorities, and strategic objectives, ensuring that information gathering is efficient, accurate, and fit for purpose.