BUSINESS CHALLENGES

Our Client

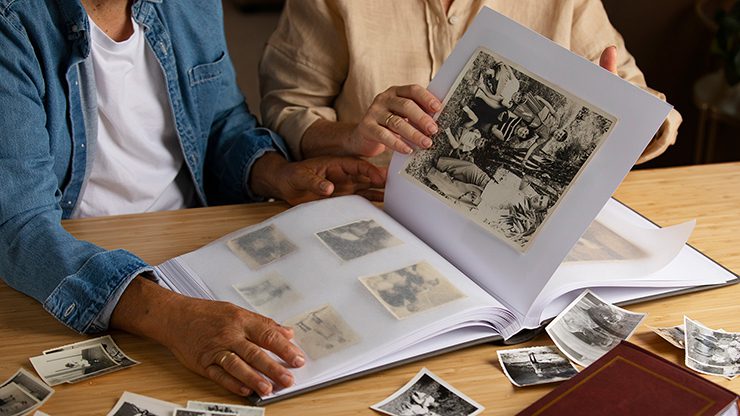

DIGI-TEXX’s client is the world’s leading provider of family history and genealogy services, headquartered in the US. Over 20 years of collecting, indexing, and digitizing records, the company has built a collection of almost 7 billion records, including immigration records, military service records, marriage records, and much more.

Project Challenges

Tracing The Historical Profiles

When it comes to genealogy research, obituaries are a treasure trove of crucial data. Beyond basic biographical details such as name and birth and death dates, they provide insights into geographic locations, relatives’ names, and other details that may be difficult to find in other historical sources.

Nevertheless, collecting and processing obituary data remains challenging for our client for several reasons:

- Handling Diverse and Enormous Data Sources: A vast amount of historical obituary data is scattered across millions of digital resources, including public newspapers, libraries, government bodies, churches, universities, funeral home websites, …

- Manual Inefficiency: Manually extracting and indexing data across different formats, data types, and complex web structures is time-consuming and costly.

- Data Duplication: The same information can appear in multiple sources; as a result, cleansing the data requires significant time and labor.

- Data Quality Assurance: Ensuring data accuracy, completeness, and consistency is challenging because of errors, unstructured data, and missing information.

Project Scope

The project aims to develop a robust solution that automatically collects historical obituary data from digital sources for the client, then standardizes the collected data to ensure consistent, high-quality information.

- Volume: 450,000 records per URL, across 60 URLs per month

- Extracted fields: each record includes the person’s name, gender, images, birthplace, age, residence, date of death, location, cause of death, …
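The field list above maps naturally onto a record schema. The sketch below is a hypothetical illustration, not the client’s actual database structure: the class name `ObituaryRecord`, the extra `source_url` field, and the choice of `name` and `date_of_death` as required fields are all assumptions. The `missing_fields` helper shows one simple completeness check of the kind the data-quality goal calls for.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class ObituaryRecord:
    """Hypothetical schema for one extracted obituary record."""
    source_url: str                                   # assumed: page the record came from
    name: str
    gender: Optional[str] = None
    images: list[str] = field(default_factory=list)   # image URLs or file paths
    birthplace: Optional[str] = None
    age: Optional[int] = None
    residence: Optional[str] = None
    date_of_death: Optional[date] = None
    location: Optional[str] = None
    cause_of_death: Optional[str] = None


# Assumed minimum for a usable record; the real rules are not stated in the source.
REQUIRED_FIELDS = ("name", "date_of_death")


def missing_fields(record: ObituaryRecord, required=REQUIRED_FIELDS) -> list[str]:
    """Return the required fields that are empty, e.g. to route a record to manual review."""
    return [name for name in required if getattr(record, name) in (None, "", [])]


rec = ObituaryRecord(source_url="https://example.org/obit/1", name="Mary Smith", age=84)
print(missing_fields(rec))  # ['date_of_death']
```

Typed optional fields make gaps explicit, so incomplete records can be flagged for review instead of silently entering the database.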

SOLUTION

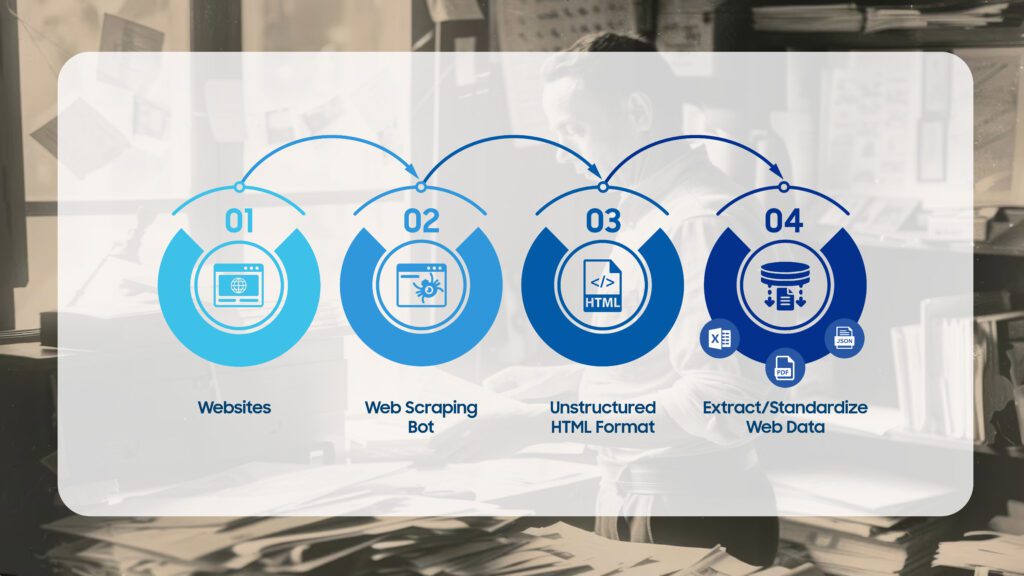

Historical Data Web Scraping Solution

To address these challenges, DIGI-TEXX developed a sophisticated web scraping solution that automates the collection and processing of historical obituary data across a large number of public digital newspaper archives and open-source sites. The solution enriches the client’s database, giving its users access to millions of new records.
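Collecting obituaries across many archive sites begins with discovering which pages to visit. The source does not describe how this is done. One common approach, assuming the target sites publish standard XML sitemaps (the sitemaps.org protocol), is a breadth-first walk from a sitemap index down to individual page URLs. In the sketch below, `parse_sitemap`, `discover_pages`, and the `"obituar"` URL keyword filter are hypothetical. This plain keyword filter stands in for the ML/NLP-based page selection the project describes. `fetch` is injected so that no particular HTTP client is assumed.

```python
import xml.etree.ElementTree as ET
from collections import deque
from typing import Callable

SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def parse_sitemap(xml_text: str) -> tuple[list[str], list[str]]:
    """Split one sitemap document into (child_sitemap_urls, page_urls)."""
    root = ET.fromstring(xml_text)
    locs = [el.text.strip() for el in root.iter(f"{SITEMAP_NS}loc") if el.text]
    if root.tag == f"{SITEMAP_NS}sitemapindex":
        return locs, []          # an index only points at further sitemaps
    return [], locs              # a <urlset> lists actual pages


def discover_pages(start: str, fetch: Callable[[str], str],
                   keyword: str = "obituar") -> list[str]:
    """Breadth-first walk over nested sitemaps, keeping page URLs that contain `keyword`.

    `keyword` is a hypothetical heuristic matching both "obituary" and "obituaries".
    """
    queue, seen, pages = deque([start]), {start}, []
    while queue:
        children, urls = parse_sitemap(fetch(queue.popleft()))
        for child in children:
            if child not in seen:            # avoid revisiting shared sub-sitemaps
                seen.add(child)
                queue.append(child)
        pages.extend(u for u in urls if keyword in u.lower())
    return list(dict.fromkeys(pages))        # drop duplicate pages, keep order
```

In practice `fetch` would wrap an HTTP client with rate limiting and retries. Keeping it as a parameter also makes the traversal easy to test against canned XML.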

- Scraper Development: Our team integrated machine learning algorithms and natural language processing (NLP) to build a robust web scraper capable of:

  - Accessing public newspaper archives and other websites, including those of schools, hospitals, and churches

  - Navigating a vast array of sitemaps, site sections, and search results

  - Extracting the required data fields from each target website, including the person’s name, birthplace, age, date of death, residence, cause of death, …, and images

  - Consolidating collected data into a central repository with one record per unique individual

  - Handling various data formats and structures (PDF, HTML, images)

- Data Validation: The collected data was cleaned, standardized, and formatted to match the client’s database structure.
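The source does not specify how records are standardized or matched. The sketch below assumes each scraped record is a dict with hypothetical keys `source_url`, `name`, and `date_of_death`, plus any other fields. It normalizes names and dates, then consolidates records into one per individual. Matching on normalized name plus date of death is a simple exact-key heuristic chosen for illustration. It is not the ML/NLP matching the project describes, and the list of accepted date layouts is an assumption.

```python
import re
import unicodedata
from datetime import datetime
from typing import Optional

# Assumed date layouts; "%m/%d/%Y" treats numeric dates as US month/day order.
DATE_FORMATS = ("%B %d, %Y", "%b %d, %Y", "%m/%d/%Y", "%Y-%m-%d", "%d %B %Y")


def normalize_name(name: str) -> str:
    """Strip accents, casefold, replace punctuation with spaces, collapse whitespace."""
    decomposed = unicodedata.normalize("NFKD", name)
    no_accents = "".join(c for c in decomposed if not unicodedata.combining(c))
    cleaned = re.sub(r"[^\w\s]", " ", no_accents.casefold())
    return " ".join(cleaned.split())


def normalize_date(raw: Optional[str]) -> Optional[str]:
    """Convert a recognized date layout to ISO 8601 (YYYY-MM-DD), else return None."""
    if not raw:
        return None
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def consolidate(records: list[dict]) -> list[dict]:
    """Merge records sharing a normalized (name, date_of_death) key into one record each."""
    merged: dict[tuple, dict] = {}
    for rec in records:
        clean = {**rec,
                 "name": normalize_name(rec["name"]),
                 "date_of_death": normalize_date(rec.get("date_of_death"))}
        # Without a death date, name alone is too weak a key, so keep such records apart.
        if clean["date_of_death"]:
            key = (clean["name"], clean["date_of_death"])
        else:
            key = (clean["name"], rec["source_url"])
        if key not in merged:
            merged[key] = {**clean, "sources": [rec["source_url"]]}
            continue
        target = merged[key]
        target["sources"].append(rec["source_url"])
        for field_name, value in clean.items():
            # Fill gaps in the first-seen record with values from later duplicates.
            if target.get(field_name) in (None, "") and value not in (None, ""):
                target[field_name] = value
    return list(merged.values())
```

Exact-key matching misses variants such as "Smith, Mary" versus "Mary Smith", and it can mis-read day-first numeric dates. A production pipeline would likely add fuzzy or probabilistic record linkage on top of this kind of normalization.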

BUSINESS OUTCOME

- Optimized Data Processing Time:

  - 20-30 minutes per text-based URL

  - 2-3 days for more complex URLs

- Expanded Database: Delivered over 450,000 records per URL, significantly expanding the client’s database and providing users with access to millions of historical records.

- Improved Data Quality and Accuracy: Achieved a 95% accuracy rate, ensuring reliable obituary data.

- Powering Machine Learning and AI Applications: Created large datasets for training machine learning models to improve their accuracy and performance.