The ability to efficiently extract and harness information is paramount for organizations across various sectors. Data extraction, the process of retrieving structured or unstructured data from diverse sources, serves as the foundation for informed decision-making, strategic planning, and operational excellence. As businesses increasingly rely on data to gain competitive advantages, understanding what data extraction is and how it works becomes essential for professionals and organizations alike.

The significance of data extraction is underscored by its robust market growth. (1) The global data extraction market was valued at approximately $2.14 billion in 2019 and is projected to reach around $4.90 billion by 2027, growing at a compound annual growth rate (CAGR) of 11.8% during the forecast period.

This expansion reflects the escalating demand for efficient data management solutions and the pivotal role of data extraction in modern business operations.

What is Data Extraction?

Data extraction is the process of pulling data from various sources (physical documents, PDFs, mailboxes, online blogs, social posts, etc.) for further processing or storage. It serves as the initial step in the data integration process, laying the groundwork for subsequent data transformation and loading phases. Understanding how extraction fits into the broader data lifecycle is essential for leveraging its full potential in real-world applications.

This procedure is integral to data warehousing, business intelligence, and analytics initiatives, enabling organizations to consolidate data from disparate sources into a unified repository for comprehensive analysis.

The significance of data extraction is emphasized by its central role in the Extract, Transform, Load (ETL) process, a critical component of data warehousing and integration strategies. ETL facilitates the consolidation of data from various sources into a centralized repository, enabling organizations to perform comprehensive analyses and derive actionable insights.

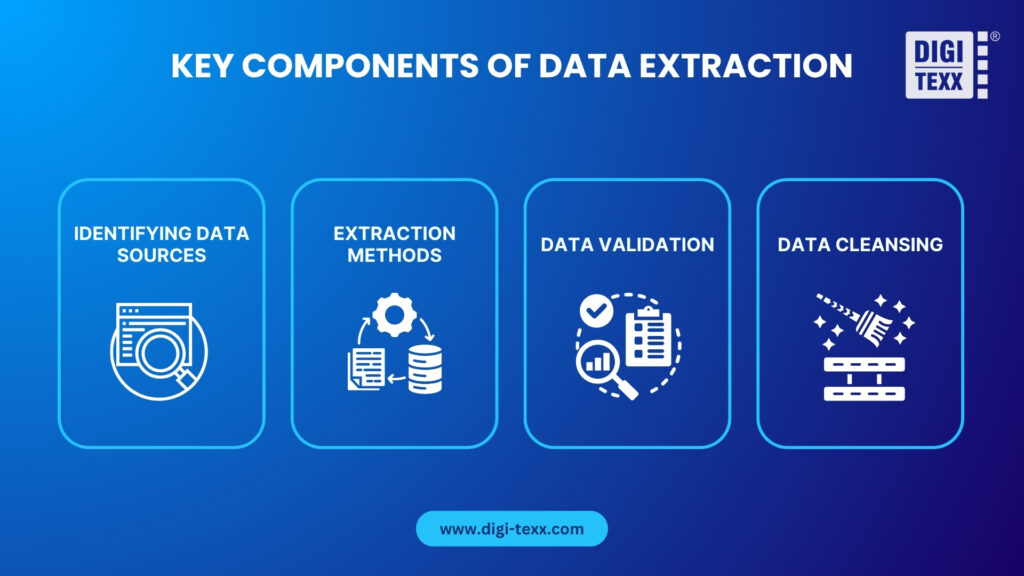

Key Components of Data Extraction:

- Identifying Data Sources: Data can originate from multiple sources, including relational databases, spreadsheets, web pages, APIs, and unstructured documents. Recognizing and cataloging these sources is crucial for effective extraction.

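- Extraction Methods:

  - Logical Extraction: Retrieves data through the source system's own interfaces (queries, APIs, exports), either in full or incrementally, without significant transformation. It suits structured sources that are directly accessible.

  - Physical Extraction: Copies data at the storage or file level, often without interacting with the source application. It is typically used when direct access to the source is limited.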

- Data Validation: Ensuring the accuracy and consistency of extracted data is vital. This step involves verifying that the data conforms to expected formats and values, thereby maintaining data integrity.

- Data Cleansing: Post-extraction, data may require cleansing to rectify inconsistencies, remove duplicates, and handle missing values, ensuring the data’s reliability for analysis.

As organizations continue to recognize the value of data-driven decision-making, the importance of efficient and accurate data extraction processes cannot be overstated. Implementing robust data extraction methodologies ensures that businesses can harness the full potential of their data assets, driving innovation and maintaining a competitive edge in the market.

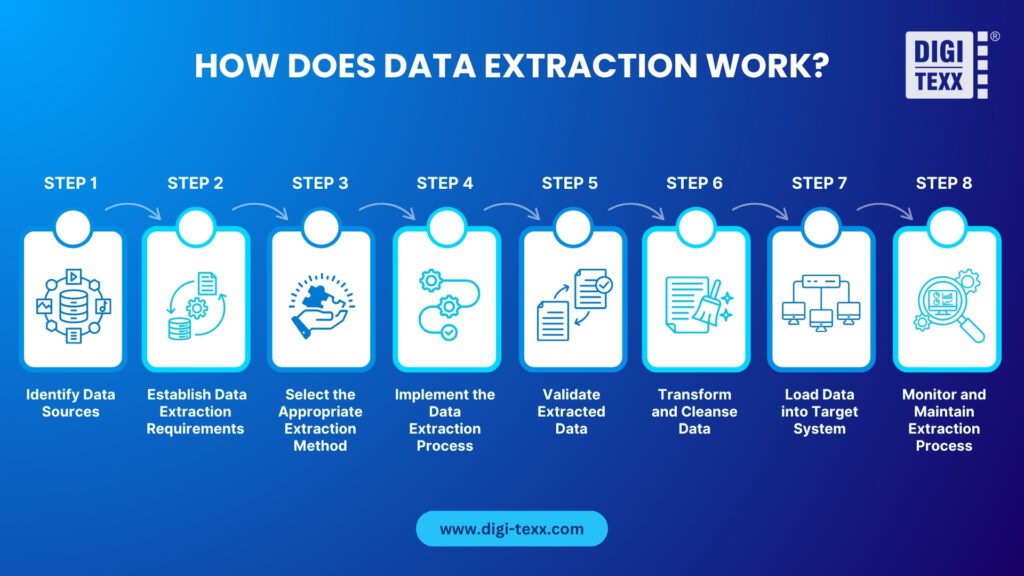

How Does Data Extraction Work?

After answering the question “What is Data Extraction”, it’s important to explore how the process actually works. Data extraction is a systematic process that involves retrieving data from various sources to prepare it for further analysis or storage. Here’s a step-by-step breakdown of how data extraction works:

Step 1: Identify Data Sources

Before extraction begins, it’s crucial to determine where the data resides, because the nature of the data source determines the best extraction method and the tools required. Data sources can be classified into three main categories:

- Structured Sources: These include relational databases (such as Oracle, PostgreSQL, or SQL Server), spreadsheets (Excel, Google Sheets), and cloud-based data warehouses. Structured data is highly organized and follows a predefined schema, making extraction relatively straightforward.

- Unstructured Sources: This refers to data stored in non-tabular formats such as PDFs, emails, scanned documents, images, and web pages. Since this data lacks a defined format, advanced extraction techniques such as Optical Character Recognition (OCR) and Natural Language Processing (NLP) are often used.

- Semi-Structured Sources: Examples include XML, JSON, and NoSQL databases (MongoDB, Cassandra). While not as rigidly structured as SQL databases, these sources still contain organizational elements like tags or key-value pairs that can facilitate extraction.

| Data Type | Characteristics |
| --- | --- |
| Structured | Highly organized, stored in tables/databases, easily searchable |
| Semi-Structured | Contains elements of both structured and unstructured data, uses tags or metadata |
| Unstructured | No fixed format, difficult to process without AI/ML tools |
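To make the three categories concrete, here is a minimal Python sketch that reads one small sample of each kind using only the standard library. The sample strings, field names, and the order-number pattern are invented for illustration. Real unstructured sources would usually need OCR or NLP rather than a pair of regular expressions.

```python
import csv
import io
import json
import re

# Structured: CSV rows follow a fixed header, so fields map directly to columns.
csv_text = "id,name,amount\n1,Alice,30.50\n2,Bob,12.00\n"
structured_rows = list(csv.DictReader(io.StringIO(csv_text)))

# Semi-structured: JSON carries its own keys, but records may differ in shape.
json_text = '[{"id": 1, "name": "Alice", "tags": ["vip"]}, {"id": 2, "name": "Bob"}]'
semi_structured = json.loads(json_text)
tags_per_record = [record.get("tags", []) for record in semi_structured]

# Unstructured: free text has no schema, so patterns must locate the fields.
email_body = "Hi, please refund order #A-1042. Contact me at alice@example.com."
order_ids = re.findall(r"#([A-Z]-\d+)", email_body)
emails = re.findall(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+", email_body)

print(structured_rows[0]["name"], tags_per_record, order_ids, emails)
```

Note how the amount of guesswork grows from top to bottom: the CSV reader needs no hints, the JSON reader has to tolerate a missing key, and the free-text case depends entirely on patterns that someone has to design and maintain.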

Examples of Common Data Sources:

| Structured Sources | Semi-Structured Sources | Unstructured Sources |
| --- | --- | --- |
| – Databases: Relational Databases (RDBMS), NoSQL Databases, Cloud Databases – Enterprise Applications: ERP (Enterprise Resource Planning) Systems, CRM (Customer Relationship Management) Systems, HR & Payroll Systems – Financial & Market Data: Stock Market & Trading Data, Cryptocurrency Data, Banking Transactions – Sensor & IoT Data (when stored in structured databases): Data from industrial sensors, GPS tracking systems, smart meters, etc. | – Web Data (APIs & JSON/XML Files): Data from RESTful APIs, social media APIs (Twitter, LinkedIn), typically formatted in JSON (JavaScript Object Notation) or XML (Extensible Markup Language) – NoSQL Databases: MongoDB (document-based), Cassandra (wide-column store), Redis (key-value store) – Emails & Chat Logs: Contain structured metadata (e.g., sender, recipient, timestamp) and unstructured message content – Geospatial & Mapping Data: GIS data from Google Maps API, satellite imagery metadata, often stored in GeoJSON or KML (Keyhole Markup Language) – IoT & Sensor Data (when stored in non-relational databases): Log files from smart home devices, health tracking apps, connected cars – Financial Transactions in Logs: Transaction logs in banking applications, cryptocurrency ledgers like blockchain | – Text Documents & PDFs: Business reports, contracts, research papers, invoices – Web Data (HTML Pages, Web Scraping): Web pages contain unstructured content that needs parsing for data extraction – Social Media Content: Posts, comments, reviews, images, and videos from platforms like Twitter, Instagram, Facebook – Multimedia Files (Images, Videos, Audio): CCTV footage, product images, podcasts, customer service call recordings – Scanned Documents & Handwritten Notes: Extracted using OCR (Optical Character Recognition) – Medical Records (when stored in free-text format): Doctors’ notes, radiology images, pathology reports – Customer Feedback & Reviews: Survey responses, online reviews, support ticket messages |

Step 2: Establish Data Extraction Requirements

After identifying the sources, the next step is to define the scope and objectives of the extraction process. This includes:

- Defining Objectives: Clearly outline what data needs to be extracted and why. For example, an e-commerce company may want to extract customer purchase history to improve personalization.

- Determining Extraction Frequency: Depending on business needs, data extraction can occur in real-time (continuous updates), on a scheduled basis (daily, weekly, monthly), or on an ad-hoc basis (one-time extraction).

- Compliance & Security Considerations: If dealing with sensitive information (financial data, healthcare records), ensure compliance with regulations such as GDPR (General Data Protection Regulation) or CCPA (California Consumer Privacy Act) to avoid legal risks.
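One way to keep these requirements from living only in meeting notes is to record them as data. The sketch below is a hypothetical structure, not a standard: the class name, field names, and the simple compliance check are all assumptions made for illustration, using the e-commerce purchase-history example from above.

```python
from dataclasses import dataclass
from enum import Enum


class Frequency(Enum):
    REAL_TIME = "real-time"
    SCHEDULED = "scheduled"
    AD_HOC = "ad-hoc"


@dataclass(frozen=True)
class ExtractionRequirement:
    """Hypothetical record of what to extract, why, how often, and under which rules."""
    objective: str
    source: str
    fields: tuple
    frequency: Frequency
    regulations: tuple = ()        # e.g. ("GDPR",) when personal data is involved
    sensitive_fields: tuple = ()   # fields that need masking or restricted access

    def unprotected_sensitive_fields(self):
        """Return requested sensitive fields when no regulation has been recorded."""
        if self.regulations:
            return ()
        return tuple(f for f in self.fields if f in self.sensitive_fields)


purchase_history = ExtractionRequirement(
    objective="Improve personalization from customer purchase history",
    source="orders_db",
    fields=("customer_id", "email", "order_total"),
    frequency=Frequency.SCHEDULED,
    regulations=("GDPR",),
    sensitive_fields=("email",),
)
print(purchase_history.unprotected_sensitive_fields())
```

A record like this can be reviewed before any extraction code is written, and a non-empty result from the check is a prompt to involve whoever owns compliance.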

Step 3: Select the Appropriate Extraction Method

The right extraction method depends on the type of data source, the data volume and format, and the complexity of the processing required. The two main methods are:

- Logical Extraction: Used when the source system is accessible and structured. This method extracts data directly without making physical changes. It includes:

  - Full Extraction: Pulls entire datasets at once, useful for initial data migration.

  - Incremental Extraction: Extracts only the newly added or modified records, minimizing processing time and system load.

- Physical Extraction: Applied when direct access to the data source is not available. Methods include:

  - Web Scraping: Extracting information from web pages using automated tools such as BeautifulSoup, Scrapy, or Selenium.

  - OCR (Optical Character Recognition): Converting scanned documents or images into text-based data.

  - ETL (Extract, Transform, Load) Pipelines: Using ETL tools like Apache NiFi, Talend, or Informatica to automate data extraction.
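The difference between full and incremental extraction is easiest to see side by side. The following is a minimal sketch using an in-memory SQLite database as a stand-in for a real source. The customers table, its updated_at column, and the "watermark" approach (remembering the newest timestamp already extracted) are assumptions for illustration. Production systems may rely on change data capture or database logs instead.

```python
import sqlite3


def full_extract(conn):
    """Full extraction: read every row, e.g. for an initial migration."""
    return conn.execute(
        "SELECT id, name, updated_at FROM customers ORDER BY id"
    ).fetchall()


def incremental_extract(conn, last_watermark):
    """Incremental extraction: read only rows changed after the stored watermark."""
    rows = conn.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # Advance the watermark to the newest change seen, or keep the old one.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "Alice", "2024-01-01T09:00:00"), (2, "Bob", "2024-01-02T10:30:00")],
)

all_rows = full_extract(conn)
watermark = max(row[2] for row in all_rows)

# Later changes in the source: one new row and one updated row.
conn.execute("INSERT INTO customers VALUES (3, 'Cara', '2024-01-03T08:15:00')")
conn.execute(
    "UPDATE customers SET name = 'Robert', updated_at = '2024-01-03T12:00:00' WHERE id = 2"
)

changed_rows, watermark = incremental_extract(conn, watermark)
print(changed_rows, watermark)
```

The second run touches only the two changed rows instead of the whole table. One limitation worth keeping in mind: a timestamp watermark does not notice rows deleted from the source, so deletions need a separate mechanism.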

Step 4: Implement the Data Extraction Process

Once the method is chosen, the actual extraction process begins. Proper implementation ensures that extracted data is reliable and usable for further analysis. This step involves:

- Connecting to Data Sources: For databases, this means writing SQL queries (e.g., SELECT * FROM customers). For APIs, this involves sending HTTP requests to retrieve JSON/XML data.

- Automating Extraction (if applicable): Organizations with large-scale data needs often use RPA (Robotic Process Automation) or Python-based scripts to automate extraction.

- Ensuring Data Consistency: Data should be extracted in a way that maintains its structure, avoiding incomplete or corrupted datasets.
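As a rough illustration of both connection styles, the sketch below reads a database table in batches and decodes a JSON API response. Everything specific here is assumed for the example: the customers table, the api.example.com URL, and the fake_opener function, which stands in for a live endpoint so the code runs offline. In real use, fetch_json would be called without the opener argument and would send an actual HTTP request.

```python
import io
import json
import sqlite3
import urllib.request


def extract_in_batches(conn, batch_size=2):
    """Yield customer rows in fixed-size batches to keep memory use bounded."""
    cursor = conn.execute("SELECT id, name, email FROM customers ORDER BY id")
    while True:
        batch = cursor.fetchmany(batch_size)
        if not batch:
            break
        yield batch


def fetch_json(url, opener=urllib.request.urlopen):
    """Send an HTTP GET and decode the JSON body; `opener` is injectable for testing."""
    request = urllib.request.Request(url, headers={"Accept": "application/json"})
    with opener(request) as response:
        return json.loads(response.read().decode("utf-8"))


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, name TEXT, email TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "Alice", "alice@example.com"), (2, "Bob", "bob@example.com"), (3, "Cara", None)],
)
batches = list(extract_in_batches(conn))


def fake_opener(request):
    """Stand-in for a live endpoint so the sketch runs without network access."""
    return io.BytesIO(b'{"orders": [{"id": 10, "total": 42.5}]}')


payload = fetch_json("https://api.example.com/orders", opener=fake_opener)
print([len(b) for b in batches], payload["orders"])
```

Naming the columns explicitly, rather than using SELECT *, keeps the extract stable if the source table later gains columns.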

Step 5: Validate Extracted Data

Extracted data must be checked for accuracy and completeness before moving to the next stage. This step is crucial to prevent data inconsistencies that could lead to inaccurate business insights. Key validation steps include:

- Data Completeness Check: Ensuring all required fields are extracted (e.g., customer records should include name, email, and phone number).

- Data Consistency Check: Verifying that extracted data matches its source (e.g., comparing record counts or totals between the source and the extract).

- Error Handling & Logging: Identifying and correcting issues like missing values, duplicate records, or formatting errors.
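The three checks above can be combined in a single pass over the extracted records. This is a minimal sketch under stated assumptions: the required fields follow the customer example in the list, duplicates are detected by email address, and the expected record count is assumed to come from the source system.

```python
import logging

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("extraction.validation")

REQUIRED_FIELDS = ("name", "email", "phone")  # assumed required fields


def validate(records, expected_count):
    """Split records into valid and rejected, logging each problem found."""
    valid, rejected = [], []
    seen_emails = set()
    for record in records:
        missing = [f for f in REQUIRED_FIELDS if not record.get(f)]
        if missing:
            log.warning("record %s missing fields: %s", record.get("id"), missing)
            rejected.append(record)
        elif record["email"] in seen_emails:
            log.warning("record %s duplicates email %s", record.get("id"), record["email"])
            rejected.append(record)
        else:
            seen_emails.add(record["email"])
            valid.append(record)
    # Consistency check: the extract should hold as many rows as the source reported.
    counts_match = len(records) == expected_count
    if not counts_match:
        log.error("expected %d records, extracted %d", expected_count, len(records))
    return valid, rejected, counts_match


records = [
    {"id": 1, "name": "Alice", "email": "alice@example.com", "phone": "555-0100"},
    {"id": 2, "name": "Bob", "email": "", "phone": "555-0101"},
    {"id": 3, "name": "Alice B.", "email": "alice@example.com", "phone": "555-0102"},
]
valid, rejected, counts_match = validate(records, expected_count=3)
```

Rejected records are kept rather than silently dropped, so they can be reviewed or re-extracted once the cause is understood.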

Step 6: Transform and Cleanse Data

Before extracted data can be used, it often needs to be transformed and cleaned so that it is ready for meaningful analysis and decision-making. This step involves:

- Data Normalization: Standardizing formats (e.g., converting dates into a uniform format like YYYY-MM-DD).

- Removing Duplicates: Eliminating redundant records to ensure data integrity.

- Handling Missing Values: Using techniques such as imputation (filling missing values with averages) or deletion (removing incomplete records).

- Data Enrichment: Merging extracted data with external datasets to enhance insights (e.g., integrating weather data with sales data).
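Normalization, de-duplication, and imputation can be sketched in a few lines of standard-library Python. The input date formats, the field names, and the choice to treat slash-separated dates as day/month/year are assumptions for this example. Mean imputation is only one of several strategies and is not always appropriate.

```python
from datetime import datetime
from statistics import mean

DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d.%m.%Y")  # assumed input formats


def normalize_date(value):
    """Convert a date string in any known format to YYYY-MM-DD."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt).strftime("%Y-%m-%d")
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {value!r}")


def cleanse(rows):
    """Normalize dates, drop exact duplicates, and impute missing amounts with the mean."""
    normalized = [{**row, "date": normalize_date(row["date"])} for row in rows]

    deduplicated, seen = [], set()
    for row in normalized:
        key = (row["order_id"], row["date"], row["amount"])
        if key not in seen:
            seen.add(key)
            deduplicated.append(row)

    known = [row["amount"] for row in deduplicated if row["amount"] is not None]
    fill_value = round(mean(known), 2) if known else None
    return [
        {**row, "amount": fill_value if row["amount"] is None else row["amount"]}
        for row in deduplicated
    ]


rows = [
    {"order_id": "A1", "date": "03/02/2024", "amount": 10.0},
    {"order_id": "A1", "date": "2024-02-03", "amount": 10.0},  # duplicate once normalized
    {"order_id": "A2", "date": "05.02.2024", "amount": None},  # missing amount
    {"order_id": "A3", "date": "2024-02-06", "amount": 20.0},
]
clean_rows = cleanse(rows)
print(clean_rows)
```

The order of operations matters: the first two rows only become recognizable as duplicates after their dates have been normalized.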

Step 7: Load Data into Target System

After transformation, the data is loaded into its destination so that it is accessible and ready for further use. The destination could be:

- A Data Warehouse (e.g., Amazon Redshift, Google BigQuery, Snowflake) – Used for analytics and reporting.

- A Data Lake (e.g., Apache Hadoop, Azure Data Lake) – Ideal for storing large volumes of raw, unstructured data.

- Business Intelligence (BI) Tools (e.g., Tableau, Power BI) – Used for visualization and insights generation.

- Operational Databases (e.g., MySQL, PostgreSQL) – If data needs to be integrated into day-to-day business operations.
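Whatever the destination, two properties are usually worth having in a load step: it should be all-or-nothing, and re-running it should not create duplicates. The sketch below shows both with an in-memory SQLite database standing in for the target system. The orders table and its columns are hypothetical, and warehouse platforms typically offer their own bulk-load and merge commands instead.

```python
import sqlite3


def load(conn, rows):
    """Insert or replace rows in one transaction so a failure leaves no partial load."""
    with conn:  # commits on success, rolls back if an exception is raised
        conn.executemany(
            "INSERT OR REPLACE INTO orders (order_id, order_date, amount) VALUES (?, ?, ?)",
            rows,
        )
    return conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]


target = sqlite3.connect(":memory:")
target.execute(
    "CREATE TABLE orders (order_id TEXT PRIMARY KEY, order_date TEXT, amount REAL)"
)

first_count = load(target, [("A1", "2024-02-03", 10.0), ("A2", "2024-02-05", 15.0)])
# Re-running with an overlapping batch replaces A2 instead of duplicating it.
second_count = load(target, [("A2", "2024-02-05", 18.0), ("A3", "2024-02-06", 20.0)])
print(first_count, second_count)
```

The primary key on order_id is what makes the second run safe to repeat: the overlapping row is replaced, so the table ends with three rows rather than four.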

Step 8: Monitor and Maintain Extraction Process

Data extraction is not a one-time process; it requires continuous monitoring and optimization. This involves:

- Performance Monitoring: Tracking extraction times and system performance to ensure efficiency.

- Data Quality Audits: Periodically reviewing extracted data to ensure it remains accurate and relevant.

- Updating Extraction Logic: Adjusting methods as data sources change (e.g., a website updates its HTML structure, requiring modifications to web scraping scripts).

- Security & Compliance Checks: Ensuring ongoing adherence to requirements such as GDPR, HIPAA, or SOC 2.
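A lightweight way to start monitoring is to wrap each extraction run so that its duration and row count are always recorded. The wrapper below is a sketch: the function name, the thresholds, and the alert wording are assumptions, and a production setup would more likely send these numbers to a metrics or alerting system than to a log.

```python
import logging
import time

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("extraction.monitor")


def monitored_run(name, extract, min_rows=1, max_seconds=60.0):
    """Run an extraction callable and record duration, row count, and alerts."""
    started = time.perf_counter()
    rows = extract()
    elapsed = time.perf_counter() - started

    alerts = []
    if len(rows) < min_rows:
        alerts.append(f"row count {len(rows)} below minimum {min_rows}")
    if elapsed > max_seconds:
        alerts.append(f"run took {elapsed:.2f}s, limit is {max_seconds:.2f}s")

    for alert in alerts:
        log.warning("%s: %s", name, alert)
    log.info("%s: %d rows in %.3fs", name, len(rows), elapsed)
    return {"name": name, "rows": len(rows), "seconds": elapsed, "alerts": alerts}


healthy = monitored_run("customers", lambda: [{"id": 1}, {"id": 2}])
# An empty result often signals that the source changed, e.g. a renamed HTML element.
broken = monitored_run("prices_scraper", lambda: [], min_rows=1)
```

A sudden drop to zero rows is often the first visible symptom of a changed source, which is why the row-count check is included alongside timing.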

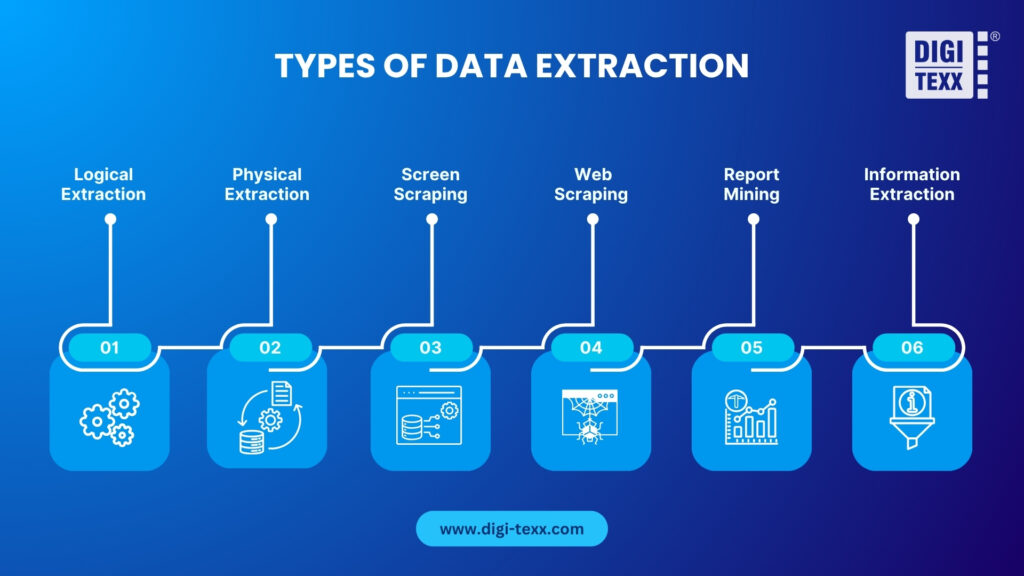

Types of Data Extraction

Data extraction can be categorized into six primary types.

1. Logical Extraction

Logical extraction involves retrieving data without making significant changes to its structure or format. This method is particularly useful when dealing with structured data sources where the data is organized in a predefined manner. Logical extraction can be categorized into two main approaches:

- Full Extraction: In this approach, the entire dataset is extracted in one go. It is often used during initial data migrations or when a comprehensive snapshot of the data is required. While straightforward, full extraction can be time-consuming and resource-intensive, especially with large datasets.

- Incremental Extraction: This method focuses on extracting only the data that has changed since the last extraction. By identifying and retrieving new or updated records, incremental extraction reduces processing time and system load, making it more efficient for ongoing data integration tasks.

2. Physical Extraction

Physical extraction involves copying data at the storage level, often without interacting directly with the source application. This method is typically employed when direct access to the data source is limited or when dealing with large volumes of data. Physical extraction can be performed using techniques such as:

- Direct Database Access: Extracting data by connecting directly to the database storage files, bypassing the application layer. This approach requires in-depth knowledge of the database architecture and is often used in disaster recovery scenarios.

3. Screen Scraping

Screen scraping refers to the process of capturing data displayed on a screen, typically from legacy systems or applications that do not provide direct data access. This method involves programmatically reading the visual output of an application and translating it into a structured format for further use. Screen scraping is often considered a last resort due to its complexity and potential fragility, as changes in the user interface can disrupt the extraction process.

4. Web Scraping

Web scraping is the technique of extracting data from websites by parsing the HTML content of web pages. This method is widely used to gather information from the internet, such as product prices, news articles, or social media content. Web scraping tools fetch pages, follow links, and, for dynamic sites, can simulate human interaction in a browser to reach the relevant data. However, it’s important to consider the legal and ethical implications of web scraping, as some websites prohibit automated data extraction in their terms of service.
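To show what "parsing the HTML content" means in practice, here is a small sketch that pulls product names and prices out of an HTML snippet. It uses Python's built-in html.parser rather than BeautifulSoup or Scrapy so that it runs without extra packages. The markup, the class names, and the PriceParser class are invented for the example, and the page is an inline string rather than a live website.

```python
from html.parser import HTMLParser


class PriceParser(HTMLParser):
    """Collect (name, price) pairs from spans with class 'name' and 'price'."""

    def __init__(self):
        super().__init__()
        self._current = None       # which field the parser is currently inside
        self._pending_name = None  # last product name seen, awaiting its price
        self.products = []

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "span" and css_class in ("name", "price"):
            self._current = css_class

    def handle_data(self, data):
        if self._current == "name":
            self._pending_name = data.strip()
        elif self._current == "price":
            price = float(data.strip().lstrip("$"))
            self.products.append((self._pending_name, price))

    def handle_endtag(self, tag):
        if tag == "span":
            self._current = None


page = """
<ul>
  <li><span class="name">Keyboard</span> <span class="price">$49.90</span></li>
  <li><span class="name">Mouse</span> <span class="price">$19.50</span></li>
</ul>
"""
parser = PriceParser()
parser.feed(page)
products = parser.products
print(products)
```

The parser depends on the class names in the markup, which illustrates why scrapers are fragile: if the site renames those classes, the result silently becomes empty.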

5. Report Mining

Report mining involves extracting data from human-readable reports, such as PDFs, HTML files, or text documents. This method is useful when direct access to the underlying data is not available, and the information is only accessible through formatted reports. Report mining tools parse these documents to retrieve structured data, enabling further analysis without requiring changes to the original reporting system.
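As an illustration, the sketch below mines a small plain-text sales report with a regular expression. The report layout, the column names, and the rule for skipping the total row are assumptions made for this example. Reports in PDF form would first need their text extracted by a separate tool.

```python
import re

REPORT = """\
MONTHLY SALES REPORT            Page 1
Region        Units     Revenue
----------------------------------
North           120    4,800.00
South            85    3,230.50
----------------------------------
Total           205    8,030.50
"""

# A data line is a region name, an integer, and an amount with thousands separators.
LINE = re.compile(r"^(?P<region>[A-Za-z]+)\s+(?P<units>\d+)\s+(?P<revenue>[\d,]+\.\d{2})$")


def mine_report(text):
    """Return one dict per data line, skipping headers, rules, and the total row."""
    rows = []
    for line in text.splitlines():
        match = LINE.match(line.strip())
        if match and match["region"] != "Total":
            rows.append({
                "region": match["region"],
                "units": int(match["units"]),
                "revenue": float(match["revenue"].replace(",", "")),
            })
    return rows


report_rows = mine_report(REPORT)
print(report_rows)
```

The total row is skipped during extraction but remains useful as a check: the extracted units and revenue should add up to the figures the report itself states.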

6. Information Extraction

Information extraction (IE) is a process that automatically extracts structured information from unstructured or semi-structured text. This method utilizes natural language processing (NLP) techniques to identify and categorize entities, relationships, and events within textual data. IE is commonly used in applications such as:

- Named Entity Recognition (NER): Identifying and classifying proper nouns in text, such as names of people, organizations, or locations (e.g., extracting names or IDs from employee documents).

- Relationship Extraction: Determining the relationships between identified entities, such as connecting a candidate to past employers.

- Event Extraction: Detecting specific events mentioned in text, such as transactions, meetings, or incidents.
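The following sketch gives a feel for entity and relationship extraction using hand-written patterns. It is a deliberately simple, rule-based stand-in: real IE systems generally rely on trained NLP models rather than regular expressions. The sample text, the ID format, and the labels are all invented for illustration.

```python
import re

TEXT = (
    "Employee ID: E-20417. Jane Doe joined on 2024-03-01. "
    "Jane Doe previously worked at Acme Corp and Globex Ltd."
)

# Assumed patterns for three entity types; a trained model would replace these.
PATTERNS = {
    "EMPLOYEE_ID": re.compile(r"\bE-\d{5}\b"),
    "DATE": re.compile(r"\b\d{4}-\d{2}-\d{2}\b"),
    "ORG": re.compile(r"\b[A-Z][a-z]+ (?:Corp|Ltd|Inc)\b"),
}


def extract_entities(text):
    """Return (label, value) pairs for every pattern match, grouped by label."""
    return [(label, m.group()) for label, rx in PATTERNS.items() for m in rx.finditer(text)]


def extract_employment(text):
    """Relationship extraction: link a person to each employer named after 'worked at'."""
    relations = []
    pattern = r"([A-Z][a-z]+ [A-Z][a-z]+) previously worked at ([^.]+)\."
    for m in re.finditer(pattern, text):
        person, employers = m.group(1), m.group(2)
        for org in PATTERNS["ORG"].findall(employers):
            relations.append((person, "worked_at", org))
    return relations


entities = extract_entities(TEXT)
relations = extract_employment(TEXT)
print(entities)
print(relations)
```

The output is structured data (labeled values and person-employer pairs) that can be stored in a table, which is the transformation from unstructured to structured content that IE is meant to provide.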

For example, in the employee onboarding process, applying IE has reduced the processing time per document from 3 minutes to just 5 seconds, while increasing accuracy from 60% (manual entry) to 97% through automation. Information extraction is therefore particularly valuable for processing large volumes of textual data and for transforming unstructured content into structured datasets for analysis.

Techniques such as text mining and web scraping have gained prominence. Text mining involves analyzing text to extract valuable information, employing methods like information retrieval and natural language processing. Web scraping, on the other hand, focuses on extracting data from websites, converting unstructured web data into structured formats for analysis.

Methods for Data Extraction

Manual Data Extraction & Automated Data Extraction

| | Manual Data Extraction | Automated Data Extraction |
| --- | --- | --- |
| How it works | Involves human effort in copying and pasting data from sources like documents, spreadsheets, or websites. | Uses scripts, software, or AI-based tools to extract data from structured, semi-structured, and unstructured sources. |
| Best for | Small-scale, one-time data extraction tasks. | Large-scale, repetitive extraction tasks. |
| Challenges | Time-consuming, prone to errors, and not scalable. | Requires technical knowledge, may need ongoing maintenance. |

Popular Data Extraction Tools

There are many tools available for data extraction, ranging from open-source libraries to enterprise-grade platforms.

Database Extraction Tools

| Tool | Description |
| --- | --- |
| Talend | Open-source ETL tool for structured data extraction. |
| IBM InfoSphere | Enterprise-grade data integration and extraction tool. |
| SQL Server Integration Services (SSIS) | Microsoft's data integration and ETL tool, included with SQL Server. |

Web Scraping Tools

| Tool | Description |
| --- | --- |
| BeautifulSoup | Python library for parsing and extracting data from HTML and XML. |
| Scrapy | Framework for large-scale web scraping and data mining. |
| Selenium | Automates browser interactions to scrape dynamic web pages. |

API-Based Data Extraction Tools

| Tool | Description |
| --- | --- |
| Postman | API testing tool that allows users to extract data from APIs. |
| RapidAPI | Marketplace for finding and integrating APIs. |
| Google Cloud Dataflow | Extracts and processes data from Google APIs and cloud sources. |

OCR & Document Data Extraction Tools

| Tool | Description |

| Tesseract OCR | Open-source tool for extracting text from images. |

| Adobe Acrobat | Extracts data from scanned PDFs. |

| Google Cloud Vision API | An AI-powered tool for extracting text and information from images. |

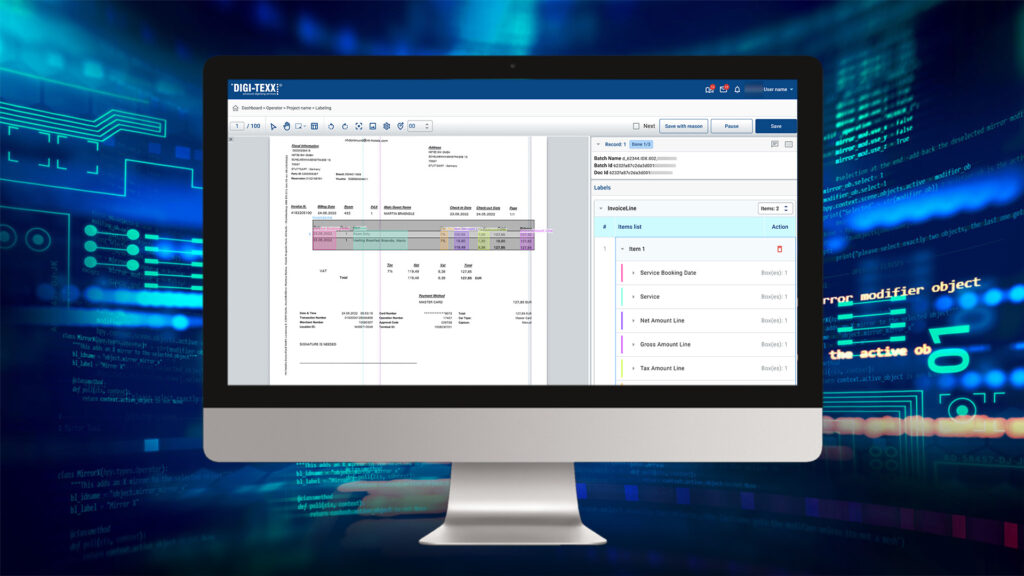

| DIGI-XTRACT | Data extraction solution built by DIGI-TEXX VIETNAM that can eliminate the need for human intervention |

Enterprise Data Extraction & ETL Tools

| Tool | Description |
| --- | --- |
| Apache NiFi | Open-source ETL tool for data movement and extraction. |
| Informatica PowerCenter | Enterprise-grade data integration and extraction platform. |
| AWS Glue | Cloud-based ETL service for structured and semi-structured data extraction. |

Unlocking the Power of Data: The Key Role of Efficient Data Extraction

What is Data Extraction? It is a fundamental process that enables organizations to collect, analyze, and utilize valuable information from various sources. Whether extracting structured data from databases, semi-structured data from APIs and logs, or unstructured data from documents and web pages, businesses rely on different tools and methods to streamline this process.

Choosing the right extraction technique, like manual, automated, API-based, web scraping, or OCR, depends on the data format, volume, and business needs. Tools like SQL for databases, Scrapy for web scraping, Tesseract for OCR, and enterprise ETL solutions like Talend and AWS Glue help automate data extraction at scale.

As businesses increasingly rely on big data, AI, and real-time analytics, efficient data extraction will play a key role in driving smarter decisions, enhancing automation, and gaining competitive advantages. Investing in the right tools and technologies ensures data accuracy, efficiency, and compliance, ultimately empowering organizations to unlock the full potential of their data.