Handwritten documents play a significant role in recording the cultural and historical heritage of a civilization. To preserve this wealth of information, it is imperative to convert handwritten historical documents in multilingual scripts into digital assets.

Modern technology has greatly improved our ability to capture and archive old documents. However, the diversity and complexity of old records written in various languages and scripts, such as old German, Fraktur, Sanskrit, Hebrew, and Ancient Greek, still make them difficult to digitize automatically.

Understanding The Process of Digitizing Handwritten Historical Documents

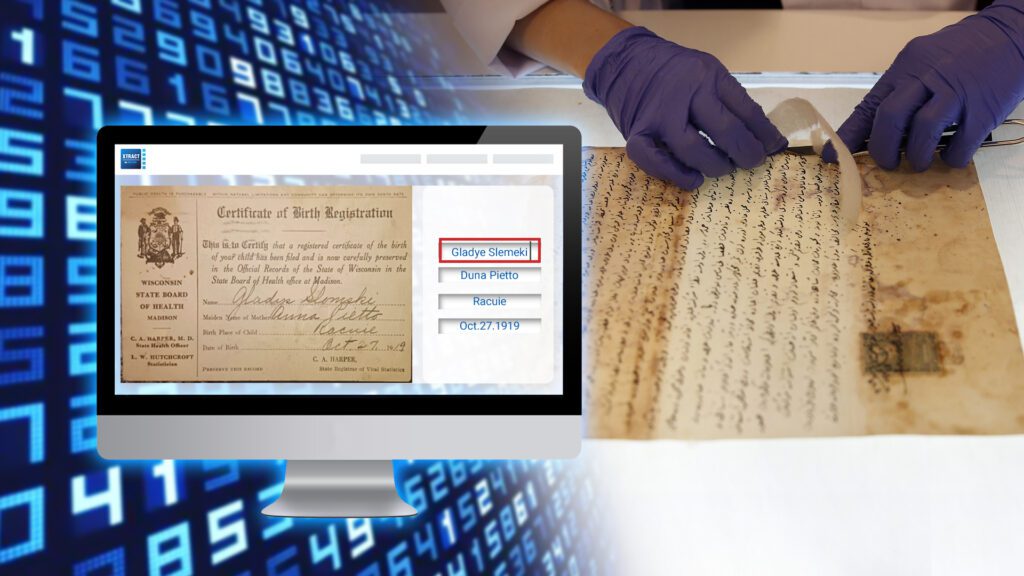

To unlock the wealth of information stored in old documents, the key step is to transcribe handwritten text into a machine-readable format. Traditionally, this process has relied on classical image processing approaches combined with manual data entry.

Because handwritten text recognition has become one of the most important fields of pattern recognition in recent years, many researchers have proposed techniques to improve the transcription of historical archives.

Optical Character Recognition (OCR) is the principal technology for capturing and extracting text from handwritten documents. It typically works in two main steps:

- First, it finds all the text in the image, like cutting out puzzle pieces

- Then, it figures out what each piece says and puts it all together into a machine-readable format
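The two steps above can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions: the page is already binarized (1 = ink, 0 = background) and not skewed, text lines are separated by blank rows, and `recognize_line` is a hypothetical placeholder for whatever trained recognition model a real system would use. Modern systems typically replace the projection-profile step shown here with a learned text detector.

```python
from typing import Callable, List, Tuple

Image = List[List[int]]  # binarized page: 1 = ink, 0 = background


def find_text_lines(img: Image, min_ink: int = 1) -> List[Tuple[int, int]]:
    """Step 1: locate text lines with a horizontal projection profile.

    Rows containing at least `min_ink` ink pixels are grouped into runs;
    each run is returned as (start_row, end_row) with end_row exclusive."""
    profile = [sum(row) for row in img]
    lines: List[Tuple[int, int]] = []
    start = None
    for y, count in enumerate(profile):
        if count >= min_ink and start is None:
            start = y                      # a new line begins
        elif count < min_ink and start is not None:
            lines.append((start, y))       # the current line ends
            start = None
    if start is not None:                  # line touching the bottom edge
        lines.append((start, len(profile)))
    return lines


def transcribe(img: Image, recognize_line: Callable[[Image], str]) -> str:
    """Step 2: recognize each cropped line and join the results in reading order."""
    return "\n".join(recognize_line(img[a:b]) for a, b in find_text_lines(img))


# Toy page with two text lines; the lambda is a HYPOTHETICAL stand-in
# for a trained recognizer and just reports each crop's height.
page = [[0, 0, 0], [1, 1, 0], [0, 1, 1], [0, 0, 0], [1, 0, 0], [0, 0, 0]]
print(find_text_lines(page))  # [(1, 3), (4, 5)]
print(transcribe(page, lambda crop: f"<{len(crop)} rows>"))
```

Cutting the page into lines first keeps the recognizer's job small: it only ever sees one line at a time, which is how the "puzzle pieces" analogy plays out in practice.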

With the development of machine learning algorithms and OCR technology, many Handwritten Character Recognition (HCR) systems like DIGI-XTRACT can analyze document layouts and recognize letters, text lines, paragraphs, and whole documents.

Converting handwritten texts to digital assets

Discover Difficulties in Digitizing Handwritten Historical Documents in Multilingual Scripts

Nevertheless, because of their unique characteristics, such as variations in writing style, overlapping characters and words, and marginal annotations, digitizing handwritten historical documents remains a challenging task for researchers.

Variations of Script

Each language differs in the shapes of its letters and the way it connects them into words. Of the many obstacles to recognizing handwritten text, the variety of writing styles is one of the most significant factors holding back the digitization of these valuable documents.

Moreover, variations in letterforms and styles can confuse even the most advanced OCR engines if they were trained on modern, standardized fonts.

Imagine trying to read a document written in a font that constantly morphs between Comic Sans and Times New Roman: that is the challenge of script variation. On top of that, writing styles evolve over time.

Here are some common examples of the complexity in handwriting style:

- Blackletter and Italic: Medieval European documents often used Blackletter, a spiky script with intricate flourishes. Modern OCR struggles with these flourishes and may mistake them for parts of letters. Italic script, another historical favorite, can also be misinterpreted because of its slant.

- Fraktur: A specific kind of Blackletter that became very popular in Germany. It was used for writing German from the late Middle Ages (around the 15th century) until the mid-20th century (1941)[1]. Its letterforms differ markedly from modern German writing, which makes them easy to misread.

Advanced OCR engines can be trained on specific historical script variations. However, training them requires large datasets of labeled historical documents, which are often scarce for less common scripts.

Multilingual Recognition

For OCR, reading a document that contains multiple languages is like solving a puzzle with missing pieces.

Two main factors make multilingual documents difficult to recognize:

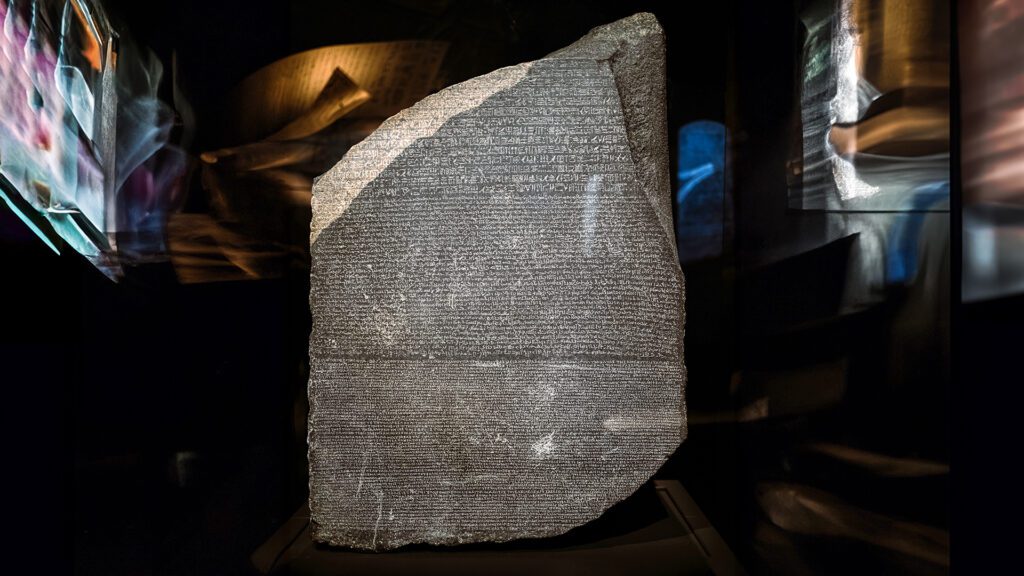

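- Script Differentiation: Take the Rosetta Stone as an example. This famous stone, dating back to 196 BC, is inscribed with a decree in three different scripts: Ancient Egyptian hieroglyphs, Demotic script (used for everyday writing in ancient Egypt), and Ancient Greek. Before recognizing a single character, an OCR system must work out which script each region of such a document is written in, because each script needs its own recognition model.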

- Mixed Languages: Documents with words from different languages within a sentence create a real challenge. OCR might struggle to segment the text correctly, leading to nonsensical combinations.

A historical document from a multilingual region might combine different languages in a single sentence. OCR and HCR systems have only a limited ability to understand the context and separate the languages for accurate recognition.

Researchers are developing multilingual OCR engines that can identify different scripts within a document and apply appropriate recognition models. However, these models still require significant improvement, especially for less common languages.
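One small piece of this routing can be illustrated on text that has already been recognized or that exists in a digital encoding: tag each word with its dominant script and pick a language-specific model for it. The sketch below assumes that the first word of a character's Unicode name (for example `GREEK` in `GREEK SMALL LETTER ALPHA`) is a good-enough proxy for its script, which is a rough heuristic rather than a full script-identification method; the model names are hypothetical. Real multilingual OCR engines identify the script from image features before recognition.

```python
import unicodedata
from collections import Counter
from typing import Dict, List, Tuple


def char_script(ch: str) -> str:
    """Approximate a character's script from the first word of its Unicode
    name, e.g. 'GREEK SMALL LETTER ALPHA' -> 'GREEK'.
    Heuristic only: non-letters are reported as 'COMMON'."""
    if not ch.isalpha():
        return "COMMON"
    name = unicodedata.name(ch, "")
    return name.split(" ")[0] if name else "UNKNOWN"


def dominant_script(token: str) -> str:
    """Most frequent script among the letters of a token."""
    counts = Counter(s for s in map(char_script, token) if s != "COMMON")
    return counts.most_common(1)[0][0] if counts else "COMMON"


def route_tokens(text: str, models: Dict[str, str],
                 default: str = "fallback-model") -> List[Tuple[str, str, str]]:
    """Tag each whitespace-separated token with its dominant script and the
    name of the (hypothetical) model registered for that script."""
    routed = []
    for token in text.split():
        script = dominant_script(token)
        routed.append((token, script, models.get(script, default)))
    return routed


# Hypothetical model registry for a German sentence that quotes Greek.
MODELS = {"LATIN": "german-model", "GREEK": "greek-model"}
for token, script, model in route_tokens("Im Anfang war der λόγος", MODELS):
    print(f"{token:10} {script:8} {model}")
```

Working token by token is what lets a single mixed-language sentence be split into pieces that each go to an appropriate model.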

Degraded Document Quality

Time takes a toll on everything, including historical documents, and their physical degradation makes digitization more challenging. For example:

- Faded Ink: Over time, the ink in letters, records, or books can fade, making it difficult for OCR software to distinguish the characters from the background. This can lead to missing information and errors.

- Tears and Stains: Physical damage to documents can create gaps and distortions that confuse OCR software. It might misinterpret these as actual characters or struggle to recognize text in those areas.

For example, a faded document containing a crucial inheritance clause might be misread if the ink of the beneficiary’s name has faded significantly.

Image pre-processing and image quality enhancement techniques can improve scanned images by increasing contrast and reducing noise.
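As a rough illustration of these two operations, the following pure-Python sketch applies linear contrast stretching and a 3x3 median filter to a grayscale image stored as a list of rows (0 = black, 255 = white). The window size and data layout are assumptions made for illustration; in practice, image libraries such as OpenCV or scikit-image provide optimized versions of these filters.

```python
from statistics import median_low
from typing import List

Gray = List[List[int]]  # grayscale image as rows of pixels, 0 = black, 255 = white


def stretch_contrast(img: Gray) -> Gray:
    """Linearly rescale intensities so the darkest pixel becomes 0 and the
    brightest becomes 255, widening the gap between faded ink and paper."""
    lo = min(min(row) for row in img)
    hi = max(max(row) for row in img)
    if hi == lo:  # uniform image: nothing to stretch
        return [row[:] for row in img]
    return [[round((p - lo) * 255 / (hi - lo)) for p in row] for row in img]


def median_filter(img: Gray) -> Gray:
    """Replace each pixel by the median of its 3x3 neighbourhood (clipped at
    the borders), which removes isolated specks of noise."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        new_row = []
        for x in range(w):
            window = [img[j][i]
                      for j in range(max(0, y - 1), min(h, y + 2))
                      for i in range(max(0, x - 1), min(w, x + 2))]
            new_row.append(median_low(window))  # low median is always an existing pixel value
        out.append(new_row)
    return out


# A faded patch: stretch the contrast first, then suppress speckle noise.
patch = [[180, 190, 200], [185, 120, 195], [190, 200, 210]]
cleaned = median_filter(stretch_contrast(patch))
```

A median filter is often preferred over simple averaging for this job because it removes isolated specks while keeping stroke edges sharper.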

However, in some cases, manual intervention by historical document specialists might be needed to decipher faded or damaged sections.

Lack of Training Data

Machine learning, the backbone of modern OCR, thrives on data. Consequently, a scarcity of data hinders historical document digitization in several ways:

- Rare Scripts: Less common historical scripts often lack a large corpus of digitized and labeled documents for training OCR or HCR engines, which leads to inaccurate recognition of those scripts.

- Dialect Variations: Historical documents might use regional dialects with specific spellings or character variations. Without training data specific to those dialects, the OCR or HCR engine struggles to recognize them correctly.

When digitizing a document written in a regional dialect of Arabic, a standard Arabic OCR engine trained on modern, standardized text might not recognize the dialectal variations, leading to significant errors.
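How "significant" such errors are is commonly quantified with the character error rate (CER): the number of character insertions, deletions, and substitutions needed to turn the model's output into the ground-truth transcription, divided by the length of that transcription. A minimal sketch using the classic dynamic-programming edit distance:

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions and
    substitutions that turn `a` into `b` (row-by-row dynamic programming)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,               # delete ca
                            curr[j - 1] + 1,           # insert cb
                            prev[j - 1] + (ca != cb)))  # substitute (free if equal)
        prev = curr
    return prev[-1]


def cer(reference: str, hypothesis: str) -> float:
    """Character error rate: edit distance divided by the reference length."""
    if not reference:
        raise ValueError("reference transcription must not be empty")
    return levenshtein(reference, hypothesis) / len(reference)


print(cer("Straße", "Strafse"))  # 2 edits / 6 reference characters
```

For example, misreading “Straße” as “Strafse” (a plausible confusion in Fraktur, where the long s resembles an f) costs one substitution and one insertion, giving a CER of 2/6, or about 0.33.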

Researchers are exploring ways to leverage transfer learning techniques, where models trained on one script can be adapted to related historical scripts. This can help address the scarcity of training data for specific languages.
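One common form of transfer learning keeps a feature extractor trained on a data-rich script frozen and trains only a new, small classification head on the few labeled samples available for the target script. The sketch below shows that idea in pure Python under heavy simplifying assumptions: `frozen_features` is a hypothetical stand-in for a pretrained backbone (its weights are made-up constants), and the three-number inputs stand in for real image features. Fine-tuning some backbone layers as well is a common alternative when more target data is available.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]

# HYPOTHETICAL "pretrained" weights standing in for a backbone trained on a
# related, data-rich script. They are never updated below (frozen).
BACKBONE = [[1.0, -1.0, 0.5], [0.5, 1.0, -1.0], [-1.0, 0.5, 1.0], [1.0, 1.0, 1.0]]


def frozen_features(x: Sequence[float]) -> Vector:
    """One fixed linear layer + ReLU, used purely as a feature extractor."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in BACKBONE]


def softmax(z: Vector) -> Vector:
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def train_head(xs: Sequence[Sequence[float]], ys: Sequence[int], n_classes: int,
               lr: float = 0.1, epochs: int = 300) -> Tuple[List[Vector], Vector]:
    """Train only a new linear softmax head with SGD on cross-entropy loss."""
    feats = [frozen_features(x) for x in xs]  # backbone is frozen: compute once
    d = len(feats[0])
    W = [[0.0] * d for _ in range(n_classes)]
    b = [0.0] * n_classes
    for _ in range(epochs):
        for f, y in zip(feats, ys):
            p = softmax([sum(wk * fk for wk, fk in zip(W[k], f)) + b[k]
                         for k in range(n_classes)])
            for k in range(n_classes):
                g = p[k] - (1.0 if k == y else 0.0)  # dLoss/dlogit_k
                b[k] -= lr * g
                for i in range(d):
                    W[k][i] -= lr * g * f[i]
    return W, b


def predict(W: List[Vector], b: Vector, x: Sequence[float]) -> int:
    f = frozen_features(x)
    scores = [sum(wk * fk for wk, fk in zip(Wk, f)) + bk for Wk, bk in zip(W, b)]
    return max(range(len(scores)), key=scores.__getitem__)


# Six labeled samples from the (toy) target script, two per character class.
xs = [[1, 0, 0], [0.9, 0.1, 0], [0, 1, 0], [0.1, 0.9, 0], [0, 0, 1], [0, 0.1, 0.9]]
ys = [0, 0, 1, 1, 2, 2]
W, b = train_head(xs, ys, n_classes=3)
```

Only `W` and `b` are learned here; that is what keeps the number of labeled samples needed for the new script small.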

Contextual Understanding

Historical documents often go beyond simple text. To transcribe them accurately, it is necessary to understand the nuances of the language, the context in which the document was written, the regional culture of the place where it originated, and so on.

The following examples show why contextual understanding of historical documents matters:

- Abbreviations: Historical documents are packed with abbreviations specific to a period or profession. Because OCR does not understand the context, it may misinterpret them. For example, a medical document might use the abbreviation “Bx” for biopsy, which OCR could not interpret without prior knowledge of the medical terminology of that era.

- Symbols and Shorthand: Scribes employed various symbols and shorthand notations to save space. OCR cannot decipher these symbols without additional information about their meaning.

Advanced OCR models that incorporate contextual information from dictionaries and historical glossaries are being developed, with the aim of recognizing such abbreviations and symbols correctly.
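A very simple version of glossary-based expansion can be applied as a post-processing step on recognized text. The sketch below assumes a hand-built glossary (the entries are illustrative examples, not drawn from any particular source) and expands an abbreviation only when it appears as a whole whitespace-separated token. It does not use context, so ambiguous abbreviations would still need a smarter model or a human reviewer.

```python
import re
from typing import Dict

# Illustrative glossary entries (assumed, not taken from a specific source).
# A real project would compile period- and domain-specific glossaries.
GLOSSARY: Dict[str, str] = {
    "Bx": "biopsy",
    "Wm.": "William",
    "Jno.": "John",
    "&c.": "et cetera",
}


def expand_abbreviations(text: str, glossary: Dict[str, str]) -> str:
    """Replace abbreviations that appear as whole whitespace-separated tokens.
    Longer keys are tried first so the most specific entry wins."""
    if not glossary:
        return text
    keys = sorted(glossary, key=len, reverse=True)
    # (?<!\S) and (?!\S): the match must be bounded by whitespace or text edges.
    pattern = re.compile(r"(?<!\S)(" + "|".join(map(re.escape, keys)) + r")(?!\S)")
    return pattern.sub(lambda m: glossary[m.group(1)], text)


print(expand_abbreviations("Wm. & Jno. Smith, Bx taken &c.", GLOSSARY))
```

Because matching is token-based, an abbreviation followed by punctuation (such as “Bx,”) is left untouched; a production system would need a tokenizer that handles such cases.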

Facilitate The Process of Digitizing Handwritten Historical Documents in Multilingual Scripts

As mentioned above, machine learning is one of the key techniques for enhancing the capabilities of OCR and HCR systems.

For these engines to digitize historical documents properly, the quality of the pre-processed images is one of the top priorities. Most of these documents were written or printed years ago on ordinary paper, which has a limited lifespan and decomposes over time.

In most cases, the captured images of these documents are in poor condition (poor lighting and color, skewed angles, and so on).
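For images with poor lighting, a common first step is to binarize the page, separating ink from paper. The sketch below implements Otsu's method, a classical technique that picks the global threshold maximizing the between-class variance of the intensity histogram, assuming an 8-bit grayscale image stored as a list of rows. A single global threshold cannot correct strongly uneven illumination; local (adaptive) thresholding methods such as Sauvola's are often used for that case.

```python
from typing import List

Gray = List[List[int]]  # 8-bit grayscale image as rows of pixels (0..255)


def otsu_threshold(img: Gray) -> int:
    """Return the threshold t that maximizes the between-class variance of
    the two groups 'pixels <= t' (ink) and 'pixels > t' (paper)."""
    hist = [0] * 256
    for row in img:
        for p in row:
            hist[p] += 1
    total = sum(hist)
    sum_all = sum(i * c for i, c in enumerate(hist))
    best_t, best_var = 0, -1.0
    n_dark, sum_dark = 0, 0
    for t in range(256):
        n_dark += hist[t]
        sum_dark += t * hist[t]
        n_light = total - n_dark
        if n_dark == 0:
            continue
        if n_light == 0:
            break
        mean_dark = sum_dark / n_dark
        mean_light = (sum_all - sum_dark) / n_light
        # Between-class variance up to the constant factor 1 / total**2.
        var_between = n_dark * n_light * (mean_dark - mean_light) ** 2
        if var_between > best_var:
            best_t, best_var = t, var_between
    return best_t


def binarize(img: Gray) -> List[List[int]]:
    """Map each pixel to 1 (ink) if it is at or below the Otsu threshold, else 0."""
    t = otsu_threshold(img)
    return [[1 if p <= t else 0 for p in row] for row in img]


page = [[20, 30, 200], [210, 25, 220]]
print(otsu_threshold(page), binarize(page))
```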

Image quality enhancement is a technique applied in the pre-processing step to transform the images and make them more suitable for the machine vision algorithms used in later processing stages.
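Incorrect capture angles are often corrected by deskewing. One classical approach, sketched below under simplifying assumptions, tries a set of candidate angles and keeps the one that makes the horizontal projection profile most sharply peaked. It assumes a binarized image (1 = ink) with y growing downward, treats a positive angle as text lines that descend to the right, and uses the sum of squared row counts as the peakedness score; the candidate range and step are illustrative choices.

```python
import math
from typing import Dict, List, Sequence

Binary = List[List[int]]  # 1 = ink, 0 = background; y grows downward


def estimate_skew(img: Binary, angles: Sequence[float]) -> float:
    """Return the candidate angle (in degrees) that best straightens the text.

    Each ink pixel (x, y) is mapped to the row y*cos(a) - x*sin(a), which is
    constant along a line that descends to the right at angle a. The angle
    that packs the ink into the fewest rows maximizes the sum of squared row
    counts of this rotated projection profile."""
    ink = [(x, y) for y, row in enumerate(img) for x, v in enumerate(row) if v]
    best_angle, best_score = 0.0, -1
    for a in angles:
        t = math.radians(a)
        cos_t, sin_t = math.cos(t), math.sin(t)
        rows: Dict[int, int] = {}
        for x, y in ink:
            r = round(y * cos_t - x * sin_t)
            rows[r] = rows.get(r, 0) + 1
        score = sum(c * c for c in rows.values())
        if score > best_score:
            best_angle, best_score = a, score
    return best_angle


# Synthetic page: two 200-pixel text lines drawn with a 3-degree skew.
page = [[0] * 200 for _ in range(90)]
for y0 in (20, 60):
    for x in range(200):
        page[y0 + round(x * math.tan(math.radians(3)))][x] = 1
print(estimate_skew(page, range(-6, 7)))  # 3
```

The estimated angle would then be passed to an image-rotation routine to straighten the page before recognition.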

Non-Stop Development To Preserve The Valuable Heritage From Our Past

Digitizing historical documents in different languages is a challenging endeavor on the path to digital preservation: script variations, multilingual recognition, deteriorated document quality, scarce training data, and the need for contextual understanding all present obstacles.

Researchers, however, keep innovating. By collaborating with technology partners, they can use ready-made document and data processing tools to streamline their work and gain valuable insight into these historical treasures.

The future of historical document digitization is bright, promising wider accessibility and a deeper understanding of our past.
