Data is the backbone of business success. Companies across industries rely on real-time insights to drive decision-making, optimize operations, and stay ahead of the competition. But with massive amounts of data scattered across websites, databases, and documents, how can businesses efficiently collect and utilize this information?

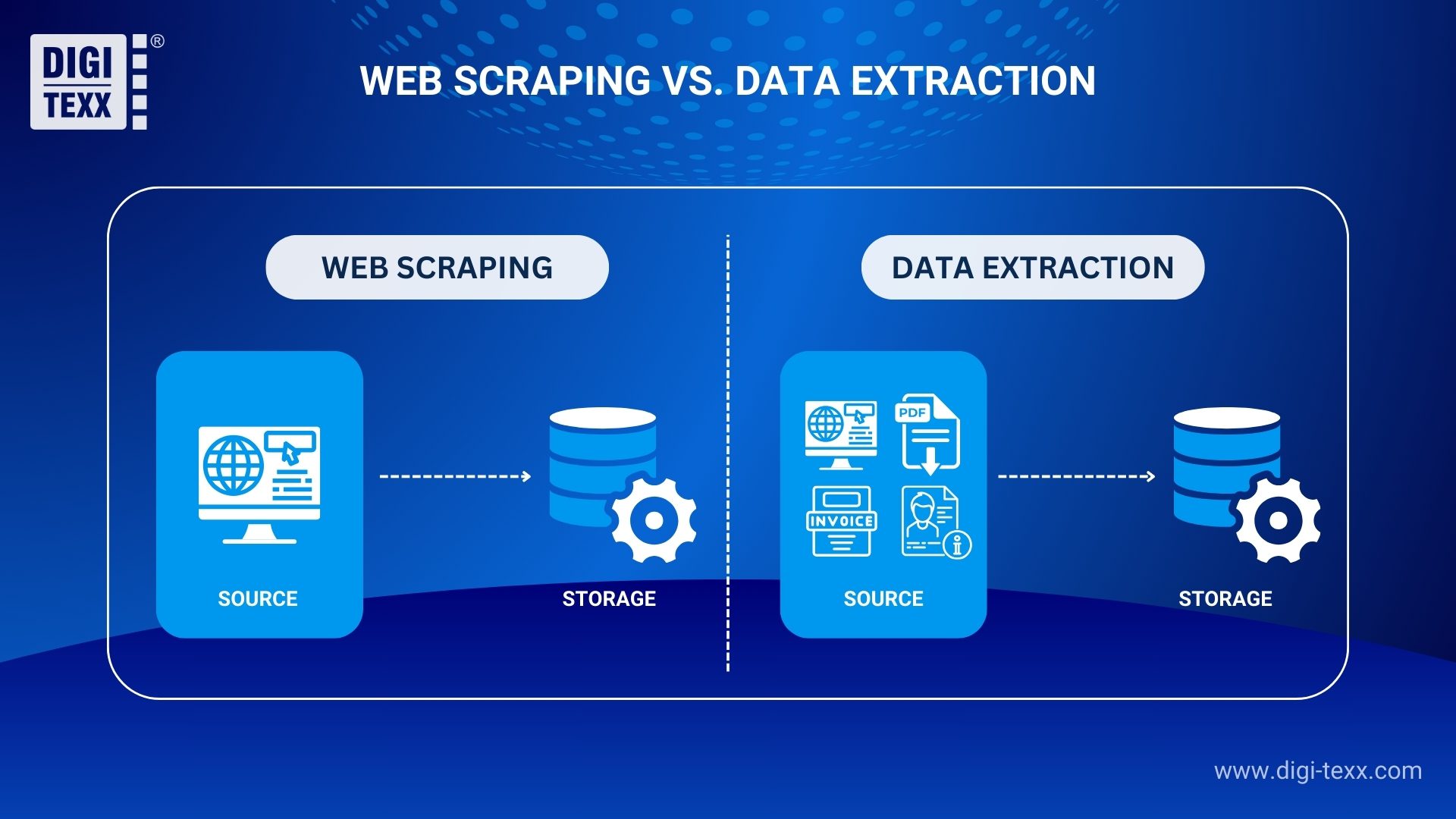

Two powerful techniques – Web Scraping and Data Extraction – help automate this process. While both methods retrieve valuable data, they serve different purposes. Web Scraping focuses on gathering online data from websites, while Data Extraction pulls information from various structured and unstructured sources like PDFs, emails, and databases.

Let’s break down the key differences, advantages, and use cases of Web Scraping vs. Data Extraction, helping you determine which approach best fits your business needs.

What is the Difference Between Web Scraping and Data Extraction?

| Aspect | Web Scraping | Data Extraction |

| Definition | Collect data specifically from websites, often converting unstructured HTML into usable formats. | Retrieve and structure data from diverse sources (e.g., PDFs, databases) for immediate use. |

| Sources | Web-only: HTML pages, APIs, online platforms. | Multi-source: PDFs, databases, emails, ERP systems, websites. |

| Purpose | Gather external web data for insights (e.g., competitor pricing). | Organize internal or mixed data for operations/analytics (e.g., invoice processing). |

| Methodology | Employ crawlers and scrapers to fetch and parse web content (e.g., Scrapy for HTML). | Use OCR, SQL queries, or parsing, tailored to the source (e.g., Tesseract for scans). |

| Output | Unstructured/semi-structured data (e.g., raw text, JSON) needing further processing. | Structured data (e.g., CSV, JSON, tables) ready for integration. |

| Tools | Scrapy, BeautifulSoup, Selenium, Octoparse. | Tesseract, Tabula, Talend, Apache NiFi. |

| Legal Considerations | May face terms-of-service or copyright issues (e.g., scraping without permission). | Often use authorized data, lowering risk (e.g., internal files). |

| Use Case Example | Scrape 1,000 product prices from eBay for analysis. | Extract 500 customer records from a CRM for reporting. |

| Common Points | Data Extraction and Web Scraping both retrieve and process data for analysis, often using automation for efficiency and requiring post-extraction cleaning. Web Scraping targets websites, while Data Extraction spans databases, APIs, and documents. Both tackle issues like access limits and data privacy yet remain vital for market research, finance, and AI/ML. | |

Overview: Data Extraction vs. Web Scraping

Definition of Web Scraping:

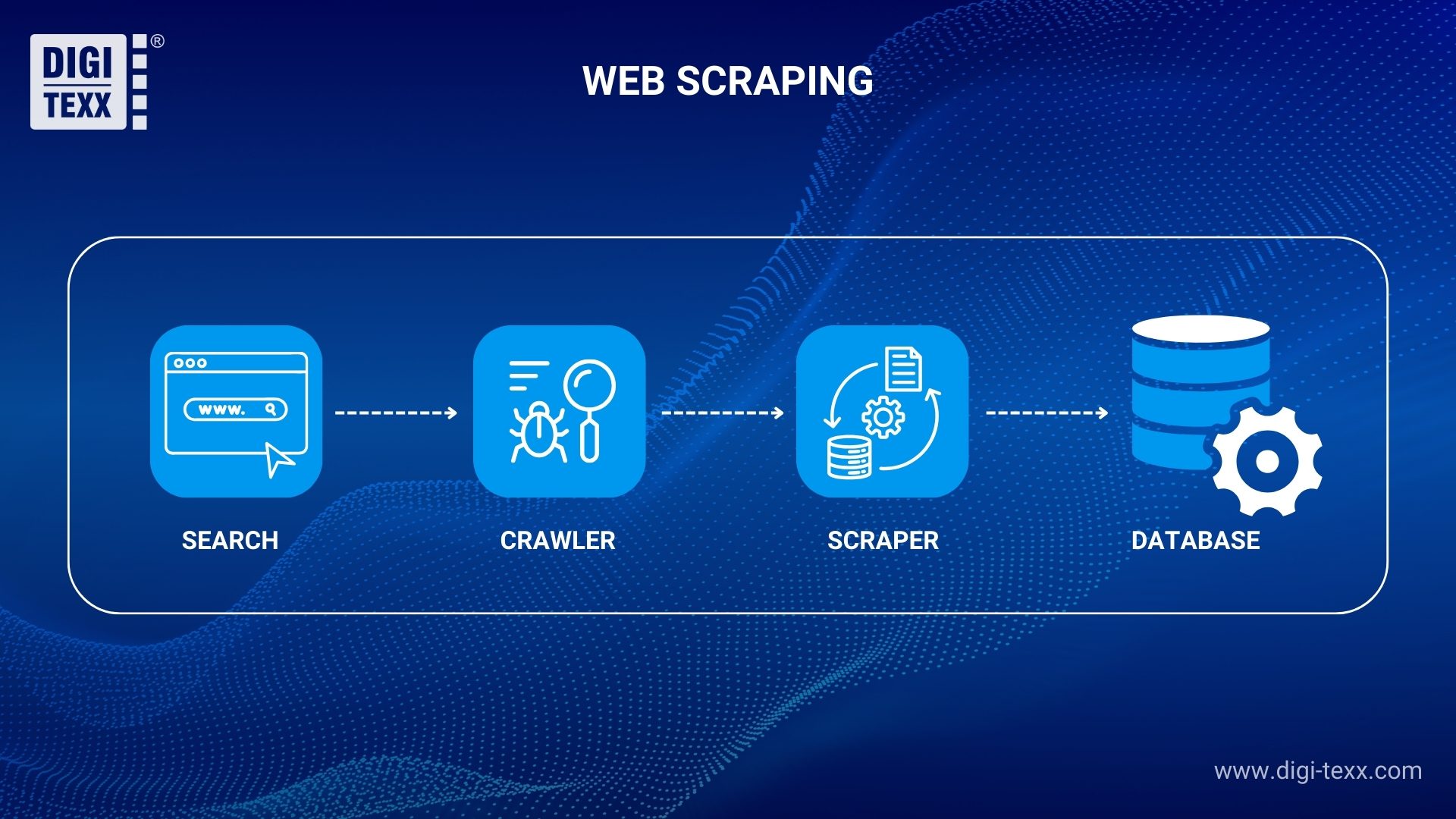

Web Scraping is an automated process that systematically collects data directly from websites, serving as a powerful digital tool for businesses to extract valuable information from the online world. At its core, it involves using software or scripts to access and pull content from HTML pages, the foundation of most websites, or APIs (Application Programming Interfaces), which some sites provide as structured data gateways. Think of it as a virtual assistant that tirelessly navigates the web, grabbing specifics like product prices, customer reviews, or blog titles without the need for manual copy-pasting.

While some major websites provide APIs for easy access to organized data, many do not, making web scraping necessary.

This process relies on two key players:

- A crawler, which systematically navigates the web by following links to find data

- A scraper, which extracts the required information from web pages.

How complex the scraper needs to be depends on the difficulty of the task; a scraper matched to the task keeps data retrieval efficient and accurate.

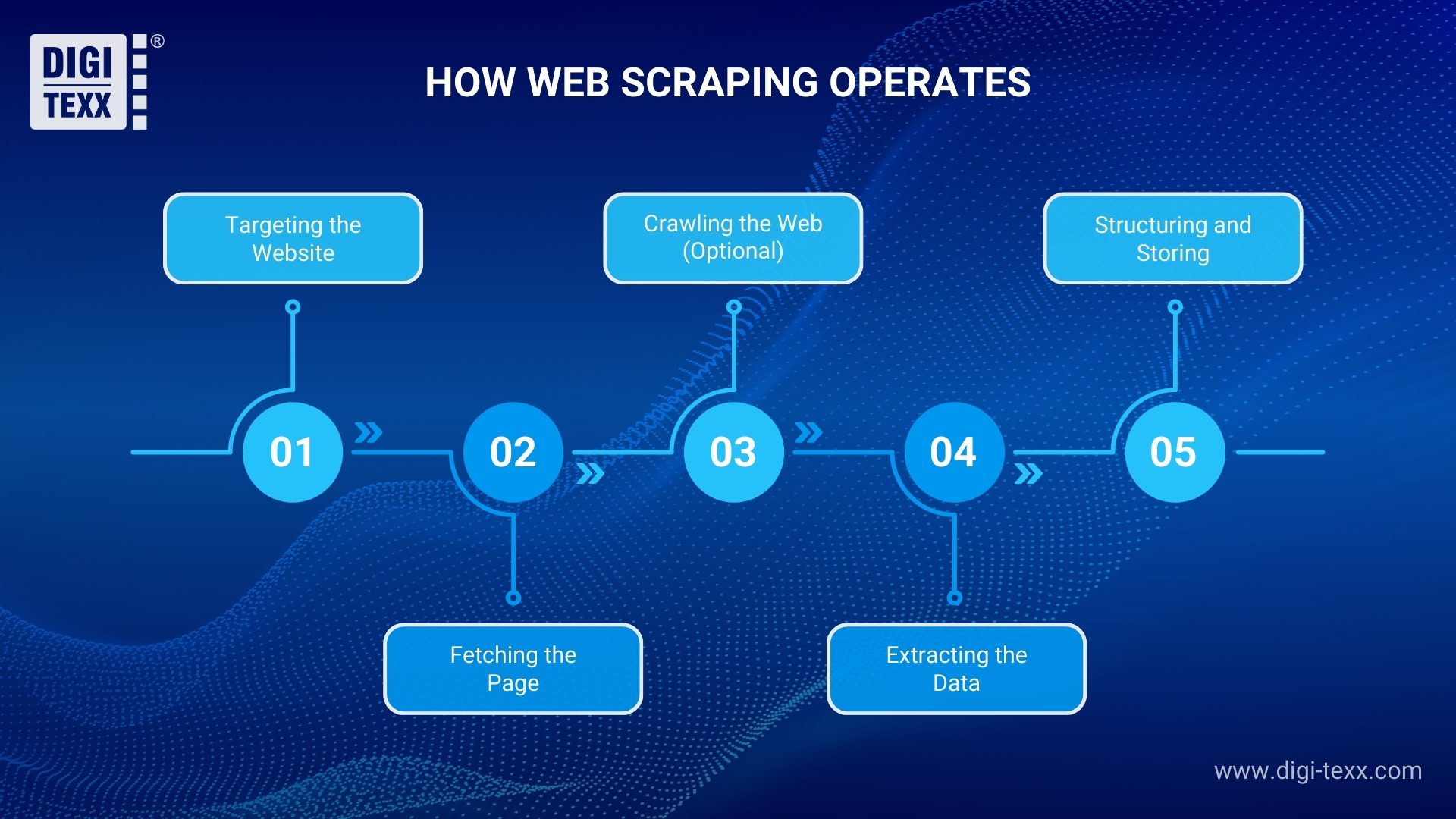

How Web Scraping Operates: A Step-by-Step Breakdown

Web Scraping might seem tricky, but it’s a simple, automated way to pull data from websites, and it is changing how businesses tap online insights like competitor prices or reviews. Understanding its steps makes it easier to plan a scraping project. Here’s how it works.

Step 1: Targeting the Website

The process starts by selecting a website with the data you need. You define your target, such as prices or reviews, setting a clear goal for the scrape. It’s like pointing your business radar at the right online source.

Step 2: Fetching the Page

Next, the web scraper sends an HTTP request to retrieve the webpage’s HTML code, the markup that defines the page’s structure. In Python, a call such as requests.get("url") can do this within seconds, enabling fast data retrieval without opening a browser.
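As a rough sketch of this step, the snippet below sends a single GET request and decodes the response. It uses Python’s standard library so that it runs without extra packages; the requests library mentioned above would play the same role. The function name `fetch_html`, the User-Agent value, and the inline `data:` URL used for the demo are illustrative assumptions, not part of any particular tool.

```python
from urllib.request import Request, urlopen


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Send one GET request and return the response body as text."""
    # A descriptive User-Agent is a courtesy to site owners (hypothetical value).
    request = Request(url, headers={"User-Agent": "example-scraper/0.1"})
    with urlopen(request, timeout=timeout) as response:
        # Fall back to UTF-8 when the server does not declare a charset.
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


# Demo with an inline data: URL so the example needs no network access.
demo_html = fetch_html("data:text/html,%3Ch1%3EHello%3C/h1%3E")
```

In a real project the URL would point at the target page, and the returned string would be handed to the extraction step.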

Step 3: Crawling the Web (Optional)

For more complex tasks, the web crawler navigates through the website, following links to discover additional pages, much like mapping 1,000 product listings from a single homepage. Tools like Scrapy automate this process, ensuring efficient and comprehensive data collection.
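The idea of following links can be sketched as a breadth-first crawl. This is a simplified illustration, not how Scrapy is implemented: the class `LinkCollector`, the function `crawl`, and the three-page `SITE` dictionary (standing in for real HTTP fetches) are all hypothetical.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(start_url, fetch, max_pages=100):
    """Breadth-first crawl; `fetch` maps a URL to its HTML, or None if missing."""
    seen, queue, order = {start_url}, deque([start_url]), []
    while queue and len(order) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue
        order.append(url)
        parser = LinkCollector()
        parser.feed(html)
        for href in parser.links:
            absolute = urljoin(url, href)  # resolve relative links
            if absolute not in seen:       # never queue a page twice
                seen.add(absolute)
                queue.append(absolute)
    return order


# Hypothetical three-page site held in memory instead of fetched over HTTP.
SITE = {
    "https://shop.example/": '<a href="/p/1">One</a> <a href="/p/2">Two</a>',
    "https://shop.example/p/1": '<a href="/">Home</a>',
    "https://shop.example/p/2": '<a href="/p/1">One</a>',
}
visited = crawl("https://shop.example/", SITE.get)
```

The `seen` set is what keeps the crawler from looping when pages link back to each other, and `max_pages` caps the work on large sites.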

Step 4: Extracting the Data

This is where the real magic happens: the scraper parses the HTML and uses selectors (e.g., XPath or CSS) to extract specific information, such as product prices from <span> tags. BeautifulSoup is ideal for static websites, while Selenium handles dynamic content, transforming raw code into actionable insights.
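To make the parsing step concrete, here is a minimal sketch that pulls prices out of `<span>` tags. It uses Python’s built-in HTML parser as a stand-in so it runs anywhere; with BeautifulSoup the same result would usually come from a CSS selector. The `price` class name, the sample markup, and the `PriceExtractor` class are assumptions made for illustration.

```python
from html.parser import HTMLParser


class PriceExtractor(HTMLParser):
    """Collect the text of every <span class="price"> element."""

    def __init__(self):
        super().__init__()
        self._in_price = False
        self.prices = []

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "span" and "price" in classes:
            self._in_price = True

    def handle_endtag(self, tag):
        # Simplification: assumes price spans contain no nested spans.
        if tag == "span":
            self._in_price = False

    def handle_data(self, data):
        if self._in_price and data.strip():
            self.prices.append(data.strip())


SAMPLE_HTML = """
<ul>
  <li><span class="name">Mug</span> <span class="price">$12.50</span></li>
  <li><span class="name">Lamp</span> <span class="price">$40.00</span></li>
</ul>
"""
extractor = PriceExtractor()
extractor.feed(SAMPLE_HTML)
prices = extractor.prices
```

Because the extractor depends on the tag and class name, it is exactly the part that needs updating when a site changes its layout.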

Step 5: Structuring and Storing

Finally, the raw data is organized into formats like CSV or JSON, so it can be loaded directly into your business systems and put to use.
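A small sketch of this final step, assuming the scraped records are already held as Python dictionaries. The field names `product` and `price`, the sample rows, and the helper names are made up for the example.

```python
import csv
import io
import json

# Hypothetical records produced by the extraction step.
rows = [
    {"product": "Mug", "price": 12.50},
    {"product": "Lamp", "price": 40.00},
]


def to_csv(records):
    """Serialize records to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=["product", "price"], lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def to_json(records):
    """Serialize records to an indented JSON array."""
    return json.dumps(records, indent=2)


csv_text = to_csv(rows)
json_text = to_json(rows)
```

Writing `csv_text` or `json_text` to a file, or passing the records to a database client, is then a one-line step in most systems.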

Definition of Data Extraction:

Data Extraction is a systematic process that involves identifying, retrieving, and organizing specific data from various sources, transforming it into a structured format that businesses can use right away. Think of it as a master organizer for your company’s data chaos – whether it’s extracting customer records from a database, pulling financial figures from PDF invoices, or capturing key details from emails and ERP systems. Unlike manual data collection, Data Extraction automates the heavy lifting, using tools and techniques tailored to each source to ensure precision and speed.

Its versatility lies in its ability to handle diverse, often unstructured inputs – text, tables, or digital files – and turn them into clean outputs like CSV files or database-ready tables. For businesses, it’s a powerful way to unlock actionable insights from scattered information, streamlining operations and fueling smarter decisions with minimal effort.

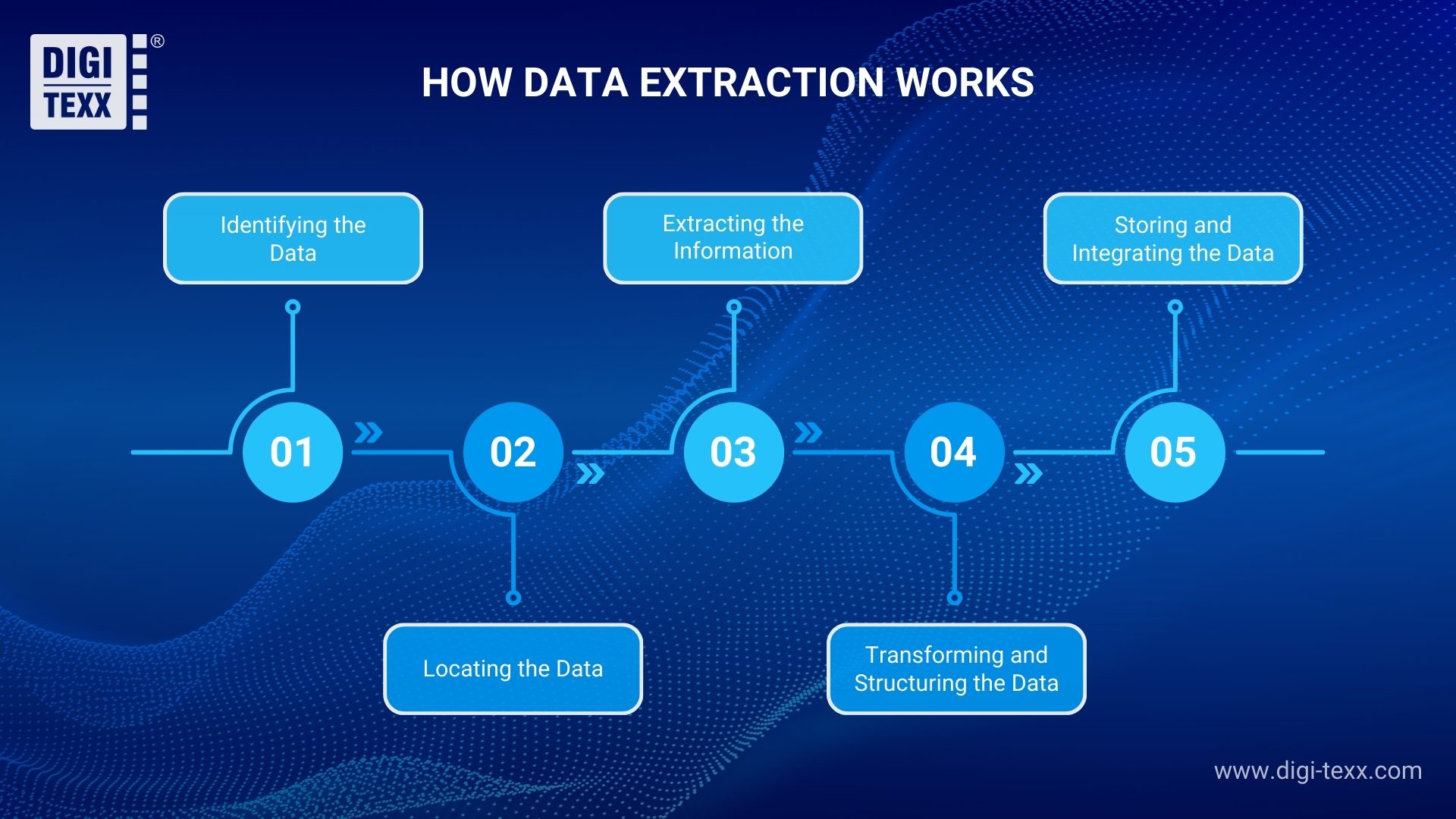

How Data Extraction Works: A Clear Step-by-Step Guide

Data Extraction simplifies turning scattered data into structured insights. Whether it’s invoice details from PDFs or CRM customer data, it saves time and boosts efficiency. Here’s how it works in five quick steps.

Step 1: Identifying the Data Source

The process starts by determining where the data resides, such as PDF invoices, CRM databases, or email threads. Businesses define specific targets, like extracting total amounts or customer names, ensuring the focus is on relevant information.

Step 2: Locating the Data

Next, the system pinpoints the required data within these sources. For databases, SQL queries (e.g., SELECT name FROM clients) retrieve the matching records directly. For scanned PDFs, Optical Character Recognition (OCR) converts the page image into text, so that details like “Total: $500” can be found and captured accurately.
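Both ways of locating data can be sketched in a few lines. The example below runs the query from the text against an in-memory SQLite database and then searches a piece of text, of the kind an OCR engine might return, for the invoice total. The table contents, the sample text, and the names `TOTAL_PATTERN` and `find_total` are illustrative assumptions.

```python
import re
import sqlite3

# In-memory database standing in for a CRM; the table and rows are made up.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE clients (name TEXT)")
conn.executemany("INSERT INTO clients VALUES (?)", [("Ada",), ("Linus",)])
names = [row[0] for row in conn.execute("SELECT name FROM clients")]
conn.close()

# Text as an OCR engine might return it from a scanned invoice (assumed sample).
ocr_text = "Invoice 0042\nTotal: $500\nThank you"
TOTAL_PATTERN = re.compile(r"Total:\s*\$(\d+(?:\.\d{2})?)")


def find_total(text):
    """Return the invoice total as a float, or None if no total is found."""
    match = TOTAL_PATTERN.search(text)
    return float(match.group(1)) if match else None


total = find_total(ocr_text)
```

Real OCR output is often noisier than this sample, so patterns usually need to tolerate extra spaces or misread characters.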

Step 3: Extracting the Information

Specialized tools extract the data efficiently. For example:

- Tabula captures tables from PDFs, for example extracting a 500-row table from a PDF report into CSV in minutes.

- Tesseract processes scanned text, for example turning 1,000 scanned obituaries into text for a database.

- Pandas reads CSV files, for example loading a 2,000-row CSV to filter customer trends quickly.

- Customized solutions like DIGI-XTRACT extract data from various sources, for example pulling 450,000 obituary records from 60 URLs with 95% accuracy.

These automated solutions let users handle hundreds of records in minutes with minimal manual effort.

Step 4: Transforming and Structuring the Data

Raw data is cleaned and standardized based on each organization’s requirements. Common transformation and structuring tasks include:

- Formatting dates and times consistently (e.g., 01/01/2025)

- Removing duplicates

- Converting data from one file format to another

- Classifying and labeling product data

- Structuring the data into formats like CSV or JSON

Transforming and structuring raw data ensures that the extracted information is ready for business applications.
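Two of the tasks above, date formatting and duplicate removal, can be sketched as follows. The example assumes the incoming dates are written month/day/year, as in 01/01/2025, and converts them to ISO 8601; the record fields and function names are hypothetical.

```python
from datetime import datetime


def normalize_date(value, input_format="%m/%d/%Y"):
    """Parse a date string in `input_format` and return it as YYYY-MM-DD."""
    return datetime.strptime(value, input_format).date().isoformat()


def clean(records):
    """Trim names, normalize dates, and drop exact duplicates (first one wins)."""
    seen, cleaned = set(), []
    for record in records:
        row = (record["name"].strip(), normalize_date(record["date"]))
        if row not in seen:
            seen.add(row)
            cleaned.append({"name": row[0], "date": row[1]})
    return cleaned


# Hypothetical raw records; the first two differ only by trailing whitespace.
raw = [
    {"name": "Ada ", "date": "01/01/2025"},
    {"name": "Ada", "date": "01/01/2025"},
    {"name": "Linus", "date": "12/31/2024"},
]
cleaned = clean(raw)
```

Normalizing values before comparing them matters: without the `strip()` call, the two "Ada" rows would not be recognized as duplicates.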

Step 5: Storing and Integrating the Data

Finally, the structured data is saved in Excel, databases, or cloud storage and integrated with tools like QuickBooks, Tableau, or DIGI-DMS.

Storing and integrating the data properly ensures seamless access for analysis and decision-making.

Web Scraping vs Data Extraction: Advantages and Disadvantages

| Aspect | Web Scraping | Data Extraction |

| Advantages | Access to vast online data; automation and scalability; no direct database access needed. | Structured and reliable data; legal and compliance-friendly; supports multiple formats. |

| Challenges | Legal and ethical risks; website structure changes; resource-intensive; anti-scraping blocks; unstructured data. | Manual setup for different file types; not ideal for real-time data; technical complexity. |

| Best for | External market intelligence, such as competitive analysis, market research, and web-only data needs. | Internal business data processing, multi-source structuring, and analytics prep. |

Web Scraping: Advantages and Disadvantages

Advantages

1. Access to Vast Online Data:

Web Scraping lets you grab real-time data – like prices or trends – from websites instantly. It’s like having a live feed of what’s happening online, perfect for market research or tracking competitors.

2. Automation & Scalability:

It processes tons of web data fast, with almost no human effort, and scales up as your needs grow. Think of it as a tireless worker, pulling insights overnight so you can act by morning – ideal for big projects without the big workload.

3. No Direct Database Access Needed:

You can collect public web data – like contacts or updates – without needing special access to a site’s backend. It’s as easy as picking fruit from a tree, letting you build lead lists or gather news quickly, with no database credentials required (the site’s terms of use still apply).

Challenges

1. Legal & Ethical Risks

Scraping can get you into trouble if it breaks website rules or laws. Websites often say “no scraping” in their terms, and ignoring that could lead to lawsuits or bans. For example, scraping personal data from a site might clash with privacy laws like GDPR, putting your business at risk.

2. Website Structure Changes

When a website updates its design, your scraper might stop working. Think of it as a map that’s suddenly out of date. If your target website changes its product page layout, your tool might miss the prices because it’s looking in the wrong spot. This means you’ll need to fix your scraper often, which can be a hassle.

3. Resource-Intensive

Scraping big websites takes a lot of computer power and time. It’s like running a marathon – your tools need energy. Scraping 1,000 pages might slow your system or rack up costs for server power. Small businesses might find it tough to keep up without the right setup.

4. Anti-Scraping Blocks

Websites use tricks to stop scrapers, like CAPTCHAs or IP bans. Picture a “No Entry” sign popping up mid-job. Sites might show a “prove you’re human” test or block your connection if you scrape too much. This slows you down, and dodging it – like using proxies – adds extra work.

5. Unstructured Data

The data you get is often messy and hard to use right away. Scraping 500 reviews might give you raw text that needs sorting into neat columns – like names and ratings. This extra step can delay your plans if you’re not ready to clean it up.

Data Extraction: Advantages and Disadvantages

Advantages

1. Structured & Reliable Data

Data Extraction gives you neat, trustworthy data ready to use. It pulls info from unorganized sources and organizes it into clean formats like spreadsheets. This means your business can trust the data for reports or decisions without second-guessing.

2. Legal & Compliance-Friendly

It’s safer because it often uses your own data, not someone else’s. Since Data Extraction works with material you already own – like CRM records or company PDFs – it sidesteps many legal headaches and makes it easier to stay in line with rules like privacy laws.

3. Supports Multiple Formats

It handles data from all kinds of places, such as PDFs, emails, and databases. Whether it’s pulling customer names from emails or sales stats from a database, Data Extraction adapts, giving your business flexibility to use data from anywhere in a way that fits your needs.

Challenges

1. Manual Setup for Different File Types

You have to set it up by hand for each kind of file, such as PDFs, emails, or even websites. Extracting from a spreadsheet needs one tool, but a scanned PDF needs another, like OCR. Setting this up for 500 different files takes time and effort upfront.

2. Not Ideal for Real-Time Data

It’s not great for grabbing live, up-to-the-minute info. Imagine wanting the latest weather but getting yesterday’s forecast. Data Extraction shines with static data, like old invoices, but if you need stock prices ticking by the second, it’s too slow compared to web tools.

3. Technical Complexity

It can be tricky to use because of the tech involved. Tools like Talend or Tesseract need some know-how; figuring out SQL or OCR settings isn’t simple. For small teams without tech skills, this can feel like a big hill to climb.

Top Data Extraction and Web Scraping Tools for Your Business

4 Key Web Scraping Tools and Their Features

| Tool | Origin | Key Features | Best for |

| Scrapy | Open-source Python framework (USA/International). | Crawl multiple pages simultaneously with high-speed efficiency. Extract data using XPath or CSS selectors (e.g., product prices from <div> tags). Export to structured formats like CSV, JSON, or databases. Handle large-scale scraping with custom pipelines. | Developers needing scalable, code-driven web scraping (e.g., scraping 10,000 pages for market analysis). |

| Selenium | Open-source automation tool (USA). | Automate browser actions (e.g., clicks, scrolls) for dynamic, JavaScript-heavy sites. Support multiple browsers (Chrome, Firefox) for real-user simulation. Extract data from interactive pages (e.g., infinite scrolls). Customizable with Python or other languages. | Dynamic web content (e.g., scraping flight prices from 100 travel sites). |

| Octoparse | Commercial tool (USA/China). | No-code, point-and-click interface for non-technical users. Handle dynamic sites with AJAX or logins via cloud scraping. Export data to Excel, CSV, or APIs with scheduling options. Offer proxy support to avoid blocks. | Businesses wanting easy, automated scraping (e.g., 500 product listings weekly). |

| Historical Data Web Scraping Solution | Crafted by DIGI-TEXX (Vietnam-based, global reach) using ML and NLP tech. | Crawl archives, newspapers, and sites (schools, churches) fast. Extract name, death date, images, etc., with 95% accuracy. Handle PDFs and HTML; output 450,000 records/URL. Consolidate unique records in 20-30 mins (text) or 2-3 days (complex). | Companies of any size that need accurate and extensive data for databases and AI. |

5 Key Data Extraction Tools and Their Features

| Tool | Origin | Key Features | Best for |

| DIGI-XTRACT | Built by DIGI-TEXX VIETNAM. | Use Optical Character Recognition (OCR) to extract text from images, PDFs, and scans. Support 30+ languages for global applicability. Integrate with Python via Pytesseract for custom workflows. Process unstructured data into plain text output. | Digitize scanned documents (e.g., extracting text from 1,000 invoices in minutes). |

| Tabula | Open-source tool (USA). | Extract tables from PDFs into CSV or Excel formats with precision. Offer a simple interface for selecting tables manually or automatically. Handle multi-page PDFs seamlessly. Require no coding, making it accessible to all. | Structure PDF reports (e.g., pulling financial data from 50 pages fast). |

| Apache NiFi | Open-source, Apache Software Foundation (USA). | Automate real-time data flows from APIs, databases, and files. Drag-and-drop interface for building extraction pipelines. Transform data on the fly (e.g., JSON to CSV). Scale to process millions of records effortlessly. | Multi-source data integration (e.g., syncing 2M sales records daily). |

| Talend Data Integration | Commercial platform (USA/France). | Connect to databases, cloud apps, and files via pre-built connectors. Provide ETL (Extract, Transform, Load) for cleaning and structuring data. Support batch and real-time extraction. Integrate with warehouses like Snowflake or Redshift. | Enterprise-grade workflows (e.g., syncing 500K CRM records). |

| ABBYY FineReader | Commercial software (Russia/USA). | High-accuracy OCR for extracting text and tables from PDFs/scans. Preserve formatting in outputs like Word or Excel. Batch-process multiple documents at once. Recognize 190+ languages with top precision. | Processing complex documents (e.g., 2,000 forms with 99% accuracy). |

Essential Considerations for Selecting Data Extraction vs. Web Scraping

| Consideration | Web Scraping | Data Extraction |

| 1. Source of the Data | Opt for this if your data is on websites – like competitor pricing or social media posts (e.g., scraping 1,000 product pages from Amazon) | Choose this for diverse sources beyond the web, such as PDFs, databases, or emails (e.g., pulling 500 invoice details from files). |

| 2. Data Structure Requirements | It works best when you can handle unstructured or semi-structured data (e.g., HTML text) and refine it later. | Ideal if you need structured data (e.g., CSV, tables) ready for immediate use in systems or analytics. |

| 3. Purpose and Business Goal | Perfect for external insights – think real-time market trends or competitive analysis (e.g., scraping X for campaign ideas). | Suited for internal optimization or multi-source structuring (e.g., organizing sales data for reports). |

| 4. Legal and Ethical Boundaries | Riskier due to potential violations of website terms or copyright laws; ensure compliance to avoid issues. | Safer with owned or authorized data (e.g., your CRM), reducing legal concerns. |

| 5. Technical Expertise and Tools | Require web-focused tools like Scrapy or Selenium – coding skills help, though no-code options like Octoparse exist. | Use varied tools (e.g., Tesseract, Talend) based on the source; may need broader technical know-how but can be simpler for structured data. |

Typical Applications of Data Extraction and Web Scraping

Both Web Scraping and Data Extraction play a crucial role in how businesses gather and process data. Below are the most common use cases for each method.

Common Use Cases for Web Scraping

1. Market Research: Gain a Competitive Advantage

Businesses leverage Web Scraping to extract real-time data on products, pricing, and customer reviews from e-commerce platforms. This allows companies to analyze market trends, compare competitors, and make strategic pricing or product development decisions backed by accurate data.

2. Data Analysis: Make Smarter Business Predictions

Web Scraping collects massive datasets for analyzing trends, forecasting sales, and understanding consumer habits. This lets your business spot industry changes early, making sharp, evidence-based predictions to fuel growth and stay proactive.

3. Information Monitoring: Lead the Market

Organizations can use Web Scraping to track and update information from multiple sources automatically. For example, financial institutions monitor news websites and market data in real time to make fast, informed investment decisions, ensuring they stay ahead of competitors.

4. Content Creation: Deliver Valuable Insights

Web Scraping enables content creators to collect and compile information from diverse sources, allowing them to produce high-quality, well-researched articles, reports, and blog posts. This not only enhances reader engagement but also boosts SEO rankings by providing valuable, up-to-date content.

5. Sentiment Analysis: Understand Customer Perceptions

Businesses and researchers use Web Scraping to gather discussions from forums, blogs, and social media to analyze public sentiment about products or services. This insight helps you tweak marketing, enhance offerings, and align messaging with what customers really think.

Common Use Cases for Data Extraction

1. Business Intelligence & Analytics: Make Data-Driven Decisions

Extracting data from sources like databases, CRM systems, or online reports allows businesses to generate real-time analytics and make well-informed decisions. Companies use this data to identify trends, optimize strategies, and improve overall performance.

2. Market Research & Competitive Analysis: Gain a Competitive Edge

Businesses use data extraction to collect information about competitors, market trends, and customer behavior. By analyzing data from websites, surveys, and social media, companies can refine their products, pricing strategies, and marketing campaigns to stay ahead of the competition.

3. Customer Insights & Personalization: Tailor the Customer Experience

By extracting data from CRM systems, customer feedback forms, and support tickets, businesses can gain a deeper understanding of customer needs. This allows for personalized marketing, better customer service, and improved product recommendations.

4. Financial & Risk Management: Improve Decision-Making

Financial institutions rely on data extraction to gather real-time data from financial reports, stock markets, and regulatory documents. This helps them assess risks, detect fraud, and make data-driven investment decisions.

5. Automated Data Entry & Workflow Optimization: Boost Efficiency & Accuracy

Data extraction eliminates manual data entry, reducing errors and saving time. Businesses can extract and structure data from invoices, contracts, and other documents to automate processes like billing, payroll, and compliance reporting.

6. Sentiment Analysis & Social Listening: Understand Customer Perceptions

Organizations extract data from social media platforms, customer reviews, and online forums to understand public sentiment about their brand, products, or industry. This information helps improve marketing strategies and enhance customer engagement.

7. Healthcare & Medical Research: Enhance Patient Care

Healthcare providers extract patient records, clinical trial data, and medical reports to enhance diagnostic accuracy, patient care, and drug research. AI-driven data extraction helps identify patterns in diseases and medical treatments more quickly and accurately.

Web Scraping vs Data Extraction: Choosing the Right Approach for Your Data Needs

Both Data Extraction and Web Scraping are essential techniques for retrieving and processing data to support business intelligence and decision-making. These methods are often automated to enhance efficiency, reduce manual effort, and ensure large-scale data collection. However, raw data typically requires cleaning, structuring, and formatting before it becomes useful for analysis.

Web Scraping focuses on extracting information from websites, including product details, customer reviews, and market trends. In contrast, Data Extraction covers a wider spectrum, pulling data from structured sources like databases, APIs, and documents such as PDFs or spreadsheets. Despite their benefits, both methods face challenges such as website restrictions, legal compliance, and data security concerns.

Industries such as market research, finance, artificial intelligence, and machine learning heavily rely on these techniques to gather insights, track trends, and optimize business strategies. Choosing the right approach depends on the data source and business objectives.