Data cleansing in SQL has become one of the most valuable tasks in producing high-quality datasets that support the business, turning raw, disparate, dirty data into reliable information. As data environments grow more sophisticated, next-generation teams are moving beyond clumsy, hand-written SQL toward automation enabled by AI, real-time processing, and advanced anomaly detection. These modern practices not only reduce manual labor but also improve data integrity and compliance.

Discover how mastering modern SQL cleaning techniques can make your analytics process more efficient and give your company a competitive edge. Read the full article on DIGI-TEXX for practical insights that keep your data strategy ahead.


What is SQL data cleaning?

Data cleansing in SQL is the process of locating and fixing data imperfections, such as mistakes, inconsistencies, and irregularities, in a dataset using SQL queries and techniques. It is a prerequisite for high-quality, useful analysis.


Common Data Issues in SQL Databases

Through SQL-based practices, teams can fix several common data quality issues:

- Missing data: some columns contain empty or NULL values, leaving records incomplete.

- Inaccurate data: values that are wrong, invalid, or factually untrue.

- Duplicate data: repeated records that bias results if left uncorrected.

- Inconsistent data: conflicting formats, naming, or logical structure between records.

- Outliers: values that lie far from the rest of the dataset and can skew analysis.

Data cleansing skills in SQL help companies build credible datasets that support effective decision-making and reporting.


8 Essential Steps for Data Cleansing in SQL

The specific data cleansing method in SQL will vary with the makeup and purpose of your dataset. However, the general process follows the same model regardless of industry or use case. To help you cleanse your data correctly, here is an eight-step process that data professionals often use:

Filter Out Irrelevant Data

Which information counts as extraneous depends entirely on the context and purpose of the dataset. Analysts must identify which records directly match the purpose of the analysis and which might skew the results.

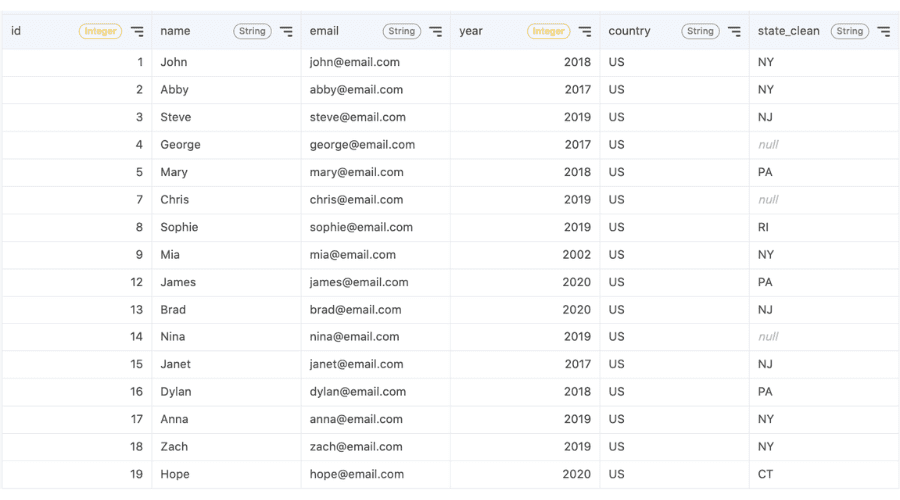

For instance, if the analysis covers only customers in the United States, records of customers from other countries would add noise to the findings. During data cleansing in SQL, such records are excluded to keep the results accurate.


This is done with a filtering query such as:

```sql
SELECT * FROM customers WHERE country = 'US';
```

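This query keeps only the rows where the customer's country is “US”, narrowing the dataset to the specified scope of analysis. A SELECT does not delete anything from the underlying table; it returns a filtered result that can be saved as a new table or view.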
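A common way to keep the filtered result while leaving the raw table untouched is `CREATE TABLE AS SELECT`. The minimal sketch below runs it in SQLite through Python's built-in `sqlite3` module, not in the BigQuery dialect used by some later examples. The sample rows and the `customers_us` table name are hypothetical.

```python
import sqlite3


def build_us_customers(conn: sqlite3.Connection) -> int:
    """Copy only US customers into a new table and return its row count.

    The original `customers` table is left untouched, so the filter can be
    revisited later without re-importing the raw data.
    """
    conn.execute("DROP TABLE IF EXISTS customers_us")
    conn.execute(
        "CREATE TABLE customers_us AS "
        "SELECT * FROM customers WHERE country = 'US'"
    )
    return conn.execute("SELECT COUNT(*) FROM customers_us").fetchone()[0]


# Hypothetical sample data for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, name TEXT, country TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "Abby", "US"), (2, "Bao", "VN"), (3, "Carl", "US"), (4, "Dana", "CA")],
)
print(build_us_customers(conn))  # -> 2
print(conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0])  # -> 4
```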

Remove Duplicates

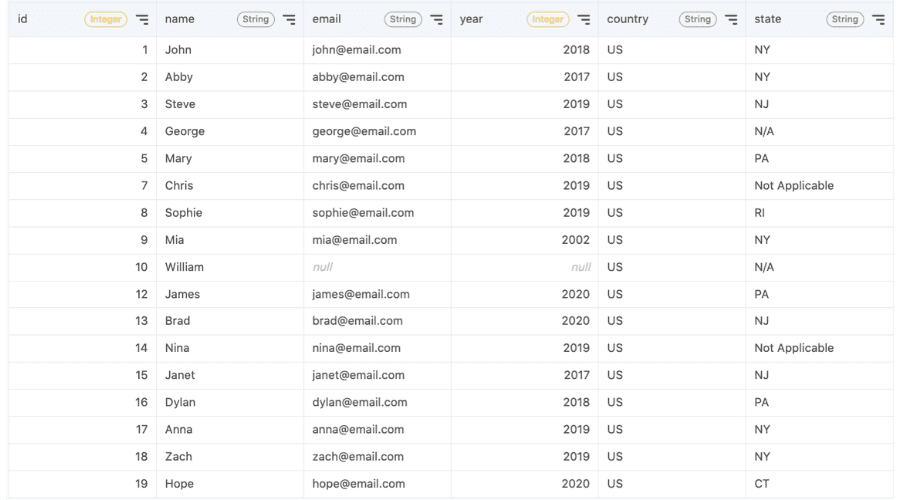

Duplicate rows often appear in datasets that are web-scraped, collected from surveys, or compiled from different databases. Duplicates waste space. They also bias results by counting some data points more than once.

As part of data cleansing in SQL, you need to identify and remove duplicate rows to preserve the integrity of the analysis. Suppose there are several identical records for a customer named Abby, all with the same ID. Only one valid record should be kept.


This can be achieved by running a query such as:

```sql
SELECT DISTINCT * FROM customers;
```

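This command returns only unique rows, leaving a deduplicated dataset ready for accurate analysis. Note that DISTINCT removes only rows that are identical in every column. Two records for Abby with the same ID but different email casing, for example, would both survive.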
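To keep exactly one row per ID even when other columns differ, a common pattern is the `ROW_NUMBER()` window function. It numbers the rows within each ID, and the query keeps only row 1.

The sketch below assumes a hypothetical `customers` table in SQLite, queried through Python's `sqlite3` module. It also assumes the rule "keep the lowest `rowid`", which is normally the earliest-inserted row. Window functions require SQLite 3.25 or later.

```python
import sqlite3

DEDUP_SQL = """
SELECT id, name, email
FROM (
    SELECT id, name, email,
           -- Number rows within each id; the lowest rowid gets 1.
           ROW_NUMBER() OVER (PARTITION BY id ORDER BY rowid) AS rn
    FROM customers
)
WHERE rn = 1
ORDER BY id
"""


def dedupe_by_id(conn):
    """Return one row per id, keeping the first-inserted version."""
    return conn.execute(DEDUP_SQL).fetchall()


# Hypothetical sample data: Abby appears three times with the same id.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, name TEXT, email TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [
        (1, "Abby", "abby@example.com"),
        (1, "Abby", "abby@example.com"),  # exact duplicate
        (1, "Abby", "ABBY@example.com"),  # same id, different casing
        (2, "Bao", "bao@example.com"),
    ],
)
distinct_rows = conn.execute("SELECT DISTINCT * FROM customers").fetchall()
print(len(distinct_rows))  # DISTINCT still leaves two Abby rows
print(dedupe_by_id(conn))
```

Which row counts as "valid" is a business decision. Ordering by an `updated_at` column, if the table has one, is often a better rule than insertion order.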

Fix Structural Errors

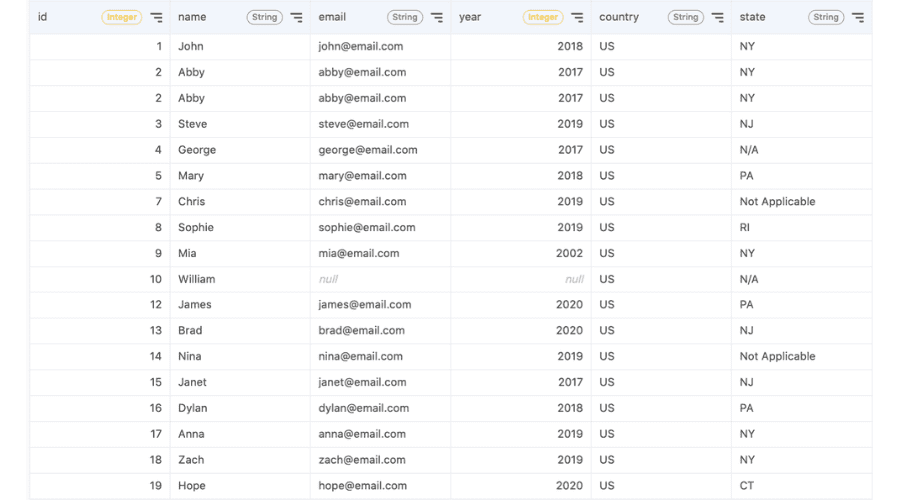

Structural errors tend to arise during data entry, migration, or measurement. They most commonly show up as inconsistent naming, spelling errors, or irregular capitalization. If not addressed, they can cause ambiguity or misclassification in the dataset.

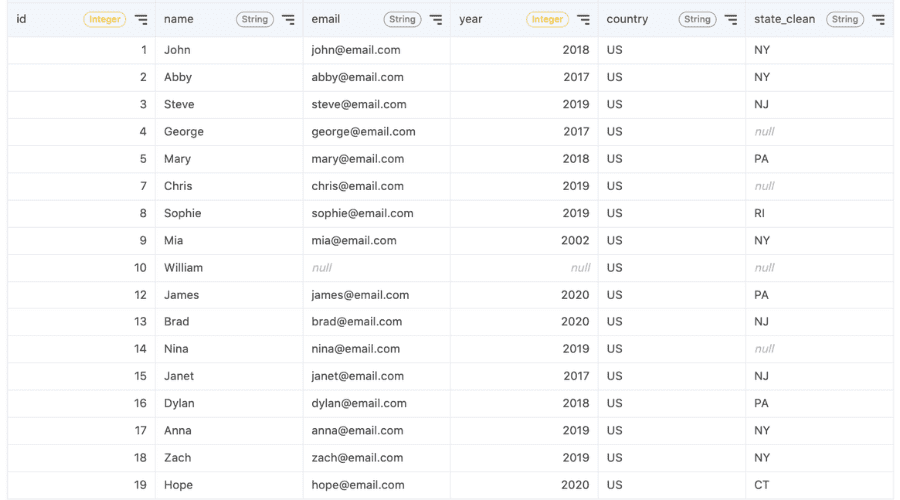

For instance, a “state” column might contain both “N/A” and “Not Applicable”, even though they mean the same thing. For consistency, these values need to be normalized.


During data cleansing in SQL, a transformation can normalize such records. The following query creates a new column, state_clean, in which both “N/A” and “Not Applicable” become NULL:

```sql
-- IF() is available in BigQuery and MySQL; other dialects use CASE WHEN
SELECT id, name, email, year, country,
  IF(state IN ('Not Applicable', 'N/A'), NULL, state) AS state_clean
FROM customers;
```
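The `IF()` call above is dialect-specific. A portable equivalent uses the standard `CASE` expression.

The sketch below runs it in SQLite through Python's `sqlite3` module against a hypothetical `customers` table. It also trims and upper-cases the value before comparing, so variants such as " n/a " are caught. That extra normalization is an assumption about the data, not part of the original query.

```python
import sqlite3

STATE_CLEAN_SQL = """
SELECT id,
       CASE
           -- Treat placeholder spellings as missing, ignoring case and padding.
           WHEN UPPER(TRIM(state)) IN ('N/A', 'NOT APPLICABLE') THEN NULL
           ELSE state
       END AS state_clean
FROM customers
ORDER BY id
"""

# Hypothetical sample data for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, state TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?)",
    [(1, "CA"), (2, "N/A"), (3, "Not Applicable"), (4, " n/a "), (5, None)],
)
rows = conn.execute(STATE_CLEAN_SQL).fetchall()
print(rows)
```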

Other common structural problems include:

- Extraneous whitespace: leading or trailing spaces, for example “ Apple ” instead of “Apple”, can cause incorrect filtering or aggregation. The TRIM(), LTRIM(), and RTRIM() functions remove them.

- Incorrect capitalization: inconsistent casing, such as “apple” for the company name, can be converted to a standard form. INITCAP() capitalizes the first letter of each word, while UPPER() and LOWER() convert the whole string to upper or lower case. (INITCAP() exists in BigQuery, PostgreSQL, and Oracle, but not in MySQL or SQLite.)

- Incorrect words or phrases: the REPLACE() function substitutes one substring for another. For example, “Apple Company” becomes “Apple Inc” by replacing “Company” with “Inc”.
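The fixes in this list can be chained into a single expression. The minimal sketch below uses SQLite (via Python's `sqlite3` module) and a hypothetical `vendors` table.

- Because SQLite has no `INITCAP()`, it normalizes with `UPPER()` instead.
- It applies `REPLACE()` after `UPPER()` because `REPLACE()` matches case-sensitively.

```python
import sqlite3

CLEAN_COMPANY_SQL = """
SELECT
    -- 1. TRIM strips leading/trailing spaces.
    -- 2. UPPER gives every row the same casing (SQLite has no INITCAP).
    -- 3. REPLACE runs last because its match is case-sensitive.
    REPLACE(UPPER(TRIM(company)), 'COMPANY', 'INC') AS company_clean,
    COUNT(*) AS n
FROM vendors
GROUP BY company_clean
"""

# Hypothetical spellings of the same company.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE vendors (company TEXT)")
conn.executemany(
    "INSERT INTO vendors VALUES (?)",
    [(" apple company ",), ("Apple Inc",), ("APPLE INC  ",)],
)
groups = conn.execute(CLEAN_COMPANY_SQL).fetchall()
print(groups)  # all three spellings collapse into one group
```

A blanket `REPLACE()` like this would also rewrite any other name that happens to contain "COMPANY". In practice, it is safer to target specific known variants.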

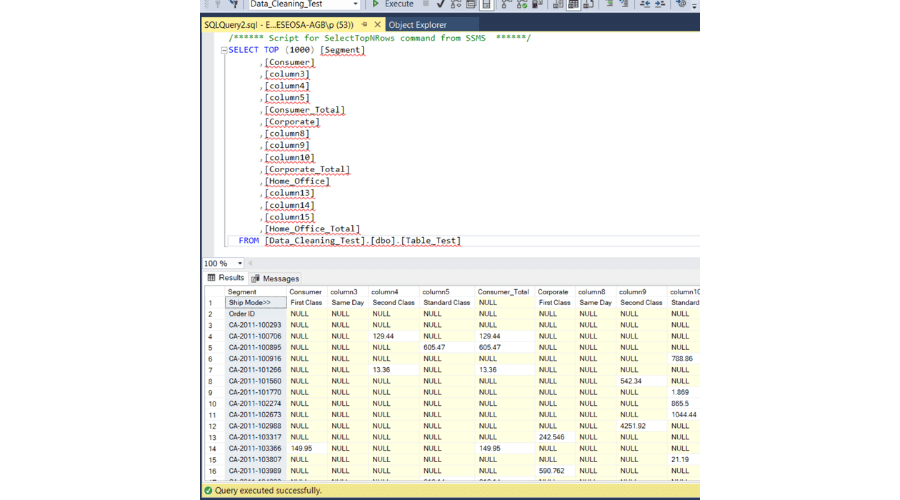

Convert Data Types

Maintaining uniform data types is part of the data cleaning process in SQL. It matters most for fields that were assigned the wrong type during import or collection. Numeric fields are often saved as text, which prevents proper computation and analysis.

For example, another dataset may have several type-related problems that the right conversions can fix:

- The zipcode field loses its leading zeros because it is stored as a number instead of a string.

- The registered_at field is stored as a UNIX timestamp, which is not human-readable.

- The revenue field includes a dollar sign, so it is treated as a string rather than a number in calculations.


The following SQL query fixes these problems:

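```sql
-- BigQuery syntax
SELECT customer,
  -- Restore leading zeros lost when the ZIP code was stored as a number
  LPAD(CAST(zipcode AS STRING), 5, '0') AS zipcode,
  -- Convert seconds since the UNIX epoch into a TIMESTAMP
  TIMESTAMP_SECONDS(registered_at) AS registered_at,
  -- Strip the dollar sign before casting (assumes whole-dollar amounts)
  CAST(REPLACE(revenue, '$', '') AS INT64) AS revenue
FROM customers_zipcode;
```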

This query does three things:

- It converts zipcode back to a string and left-pads it with zeros to five characters.
- It converts registered_at to a readable timestamp.
- It strips the dollar sign from revenue before casting it to an integer.

Type corrections like these are necessary for producing tidy, workable datasets for downstream processing.
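The same three conversions can be sketched in SQLite through Python's `sqlite3` module. SQLite lacks `LPAD()` and `TIMESTAMP_SECONDS()`, so this sketch substitutes `printf('%05d', ...)` and `datetime(..., 'unixepoch')`. It also assumes revenue strings may contain thousands separators and cents, so it strips commas and casts to `REAL`.

The table contents are hypothetical.

```python
import sqlite3

FIX_TYPES_SQL = """
SELECT customer,
       -- SQLite has no LPAD; printf zero-pads the number to 5 digits.
       printf('%05d', zipcode) AS zipcode,
       -- Interpret the integer as seconds since 1970-01-01 (UTC).
       datetime(registered_at, 'unixepoch') AS registered_at,
       -- Strip '$' and thousands separators, then cast to a number.
       CAST(REPLACE(REPLACE(revenue, '$', ''), ',', '') AS REAL) AS revenue
FROM customers_zipcode
ORDER BY customer
"""

# Hypothetical sample data for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers_zipcode "
    "(customer TEXT, zipcode INTEGER, registered_at INTEGER, revenue TEXT)"
)
conn.executemany(
    "INSERT INTO customers_zipcode VALUES (?, ?, ?, ?)",
    [("Abby", 2134, 31536000, "$1,200.50"), ("Bao", 94105, 0, "$75")],
)
fixed = conn.execute(FIX_TYPES_SQL).fetchall()
print(fixed)
```

Stripping the comma matters in SQLite. Without it, `CAST('1,200.50' AS REAL)` keeps only the leading numeric prefix and silently returns 1.0.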

Handle Missing Values

Dealing with missing values is one of the more difficult parts of data cleansing in SQL, because many analytics tools and algorithms cannot work with incomplete data. How to handle missing values depends on their cause, frequency, and pattern within the dataset.

One popular method is to delete records with NULL values. Simple as it is, this shrinks the dataset and may reduce its representativeness. Take care when data is sparse or when the missing values follow a pattern, because deleting them can introduce bias.
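Before deleting rows, it helps to measure how much data is missing in each column. The sketch below assumes a hypothetical `customers` table in SQLite, queried through Python's `sqlite3` module. It relies on the fact that `COUNT(column)` skips NULLs, so `COUNT(*) - COUNT(column)` gives the number of missing values.

```python
import sqlite3


def null_share(conn, table, columns):
    """Return {column: fraction of rows where column IS NULL}."""
    # Identifiers cannot be bound as parameters, so pass only trusted names.
    exprs = ", ".join(f"COUNT(*) - COUNT({c})" for c in columns)
    total, *nulls = conn.execute(f"SELECT COUNT(*), {exprs} FROM {table}").fetchone()
    return {c: (n / total if total else 0.0) for c, n in zip(columns, nulls)}


# Hypothetical sample data for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, year INTEGER, email TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, 2021, "a@x.com"), (2, None, "b@x.com"), (3, 2022, "c@x.com"), (4, None, None)],
)
print(null_share(conn, "customers", ["year", "email"]))
```

A column that is half empty, like `year` here, is a signal that simply deleting incomplete rows would discard a large share of the data.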

To explore more ways to handle missing or inconsistent data, check out our list of top data cleansing tools.


Another approach is imputation: replacing missing data with substitute values. In categorical fields, missing values can simply be labeled “missing.” In numeric fields, they can be replaced with summary statistics such as the mean or median. This approach rests on assumptions and can distort the underlying distribution of the data, particularly when a large share of values is missing.
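Both kinds of imputation can be expressed with `COALESCE`, which returns its first non-NULL argument. The minimal sketch below runs in SQLite via Python's `sqlite3` module. The `customers` table with `age` and `segment` columns is hypothetical, and it uses the mean rather than the median because SQLite has no built-in median aggregate.

```python
import sqlite3

IMPUTE_SQL = """
SELECT id,
       -- Numeric: fill gaps with the mean of the non-NULL values.
       COALESCE(age, (SELECT AVG(age) FROM customers)) AS age_imputed,
       -- Categorical: make the gap explicit instead of guessing.
       COALESCE(segment, 'missing') AS segment_imputed
FROM customers
ORDER BY id
"""

# Hypothetical sample data for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, age REAL, segment TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, 30.0, "retail"), (2, None, "wholesale"), (3, 50.0, None)],
)
imputed = conn.execute(IMPUTE_SQL).fetchall()
print(imputed)
```

Keeping the original column alongside the imputed one makes it easy to tell real values from substituted ones later.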

Suppose a dataset has a missing value in the “year” column. If the goal is to study customer acquisition patterns over time, a record without a year compromises the analysis. If there is no sound basis for estimating the year, the best option may be to omit that record with a query like:

```sql
SELECT * FROM customers WHERE year IS NOT NULL;
```

Another SQL data cleansing technique is to replace missing values with a predetermined constant. This approach is normally used when a missing value is equivalent to zero or a neutral value and does not distort the analysis.

For instance, NULL values in the sales column can be replaced with 0 to keep the dataset consistent, as in the following query:

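```sql
-- IFNULL works in BigQuery, MySQL, and SQLite; COALESCE(sales, 0) is the ANSI SQL equivalent
SELECT account_name, updated_time, IFNULL(sales, 0) AS sales
FROM sales_performance;
```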

Detect and Manage Outliers

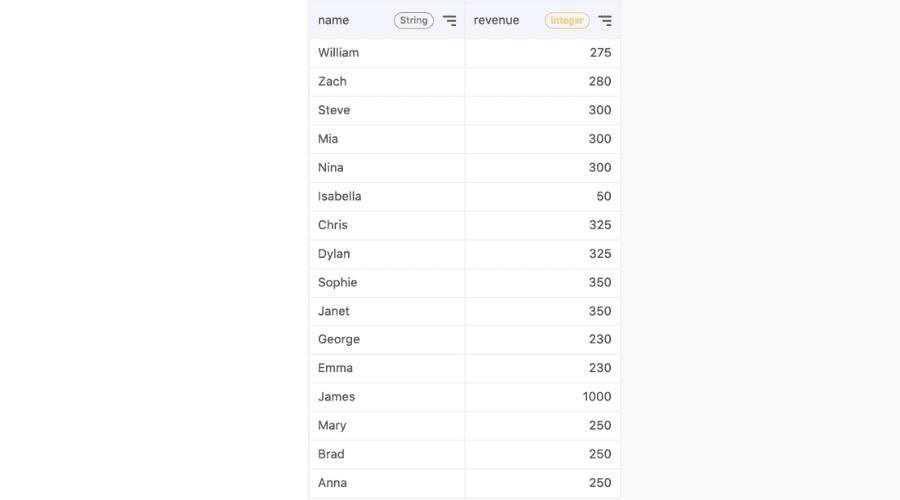

Many datasets contain isolated values that differ significantly from the rest. These are known as outliers. Deciding how to handle them is a critical step in data cleansing in SQL. If an outlier stems from a data entry error, it may be appropriate to remove or correct the value. However, when the outlier is a legitimate observation, it requires more careful judgment.

In some analytical scenarios, valid outliers that are irrelevant to the goal of the analysis can be excluded. In others, they may carry important information and should be retained. The key lies in understanding the source and context of the outlier.

In a dataset containing revenue figures for individual customers, statistical methods like the interquartile range (IQR) rule can help detect unusual values. Outliers are generally defined as values that fall below the first quartile minus 1.5 times the IQR, or above the third quartile plus the same amount.
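Written out, the rule reads as follows, where $Q_1$ and $Q_3$ are the first and third quartiles. The numbers are purely illustrative and assume hypothetical quartiles of $Q_1 = 20$ and $Q_3 = 40$:

$$
\begin{aligned}
\mathrm{IQR} &= Q_3 - Q_1 = 40 - 20 = 20 \\
\text{lower fence} &= Q_1 - 1.5 \cdot \mathrm{IQR} = 20 - 30 = -10 \\
\text{upper fence} &= Q_3 + 1.5 \cdot \mathrm{IQR} = 40 + 30 = 70
\end{aligned}
$$

With these quartiles, a revenue of 95 would be flagged as an outlier, while 65 would not. The query below stores the quantity $1.5 \cdot \mathrm{IQR}$, not the IQR itself, in its `iqr_value` column.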


The following SQL query illustrates how to identify such outliers and replace them with the average of the remaining revenue values:

```sql
-- BigQuery syntax
WITH
  quantiles AS (
    -- One row holding the 25th and 75th percentiles of all revenue values
    SELECT
      APPROX_QUANTILES(revenue, 4)[OFFSET(1)] AS percentile_25,
      APPROX_QUANTILES(revenue, 4)[OFFSET(3)] AS percentile_75
    FROM revenues
  ),
  iqr AS (
    SELECT
      percentile_25,
      percentile_75,
      1.5 * (percentile_75 - percentile_25) AS iqr_value
    FROM quantiles
  ),
  outliers AS (
    -- Set values outside the IQR fences to NULL
    SELECT
      name,
      IF(revenue < (percentile_25 - iqr_value)
         OR revenue > (percentile_75 + iqr_value), NULL, revenue) AS revenue_null
    FROM revenues
    CROSS JOIN iqr
  )
-- Replace each NULL with the mean of the remaining (non-outlier) values
SELECT
  name,
  IFNULL(revenue_null, AVG(revenue_null) OVER ()) AS clean_revenue
FROM outliers;
```

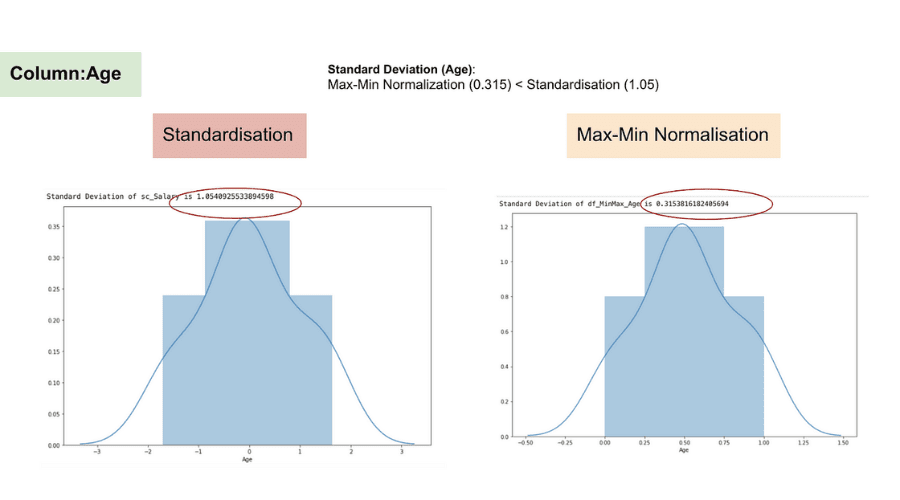
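SQLite has no counterpart to BigQuery's `APPROX_QUANTILES`, so this runnable sketch splits the work in two:

- Python computes the quartiles with `statistics.quantiles`, using linear interpolation via `method="inclusive"`. The results may differ slightly from BigQuery's approximate quantiles.
- The fences are passed into the SQL as named parameters.

The `revenues` table and its rows are hypothetical. `AVG() OVER ()` needs SQLite 3.25 or later.

```python
import sqlite3
import statistics

OUTLIER_SQL = """
WITH flagged AS (
    SELECT name,
           -- NULL out values outside the IQR fences.
           CASE WHEN revenue < :low OR revenue > :high THEN NULL
                ELSE revenue
           END AS revenue_null
    FROM revenues
)
SELECT name,
       -- AVG() skips NULLs, so this is the mean of the non-outlier values.
       COALESCE(revenue_null, AVG(revenue_null) OVER ()) AS clean_revenue
FROM flagged
ORDER BY name
"""


def clean_outliers(conn):
    """Replace IQR outliers in revenues.revenue with the mean of the rest."""
    values = [r for (r,) in conn.execute("SELECT revenue FROM revenues")]
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr_value = 1.5 * (q3 - q1)  # 1.5 x IQR, as in the query above
    bounds = {"low": q1 - iqr_value, "high": q3 + iqr_value}
    return conn.execute(OUTLIER_SQL, bounds).fetchall()


# Hypothetical data: Finn's revenue is far above everyone else's.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE revenues (name TEXT, revenue REAL)")
conn.executemany(
    "INSERT INTO revenues VALUES (?, ?)",
    [("Abby", 100.0), ("Bao", 110.0), ("Carl", 120.0),
     ("Dana", 130.0), ("Eve", 140.0), ("Finn", 1000.0)],
)
print(clean_outliers(conn))
```

With these six values, the fences come out at 75 and 175. Only Finn's 1000 falls outside them, and it is replaced by 120.0, the mean of the other five values.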

Standardize Formats

Standardizing values is an essential part of data cleansing in SQL, because it keeps the dataset consistent. It is especially critical when data comes from different systems, sources, or structures. Standardization may involve converting measurements to a common unit or rescaling values so they can be compared on a common basis.


For instance, temperature readings may be recorded in Fahrenheit in one system and Celsius in another. To standardize the data, Fahrenheit readings can be converted to Celsius with the following SQL statement:

```sql
SELECT fahrenheit,
  -- 5.0 forces decimal division; 5 / 9 truncates to 0 in some dialects
  (fahrenheit - 32) * 5.0 / 9 AS celsius
FROM temperatures;
```

Similarly, scores on a 5-point scale can be rescaled to a 100-point scale for comparison or reporting. This requires only a simple multiplication:

```sql
SELECT points_5,
  points_5 * 20 AS points_100
FROM scores;
```
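Both conversions can be checked quickly in SQLite through Python's `sqlite3` module; the tables and values below are hypothetical.

The sketch also shows a dialect pitfall worth knowing when porting these queries. In BigQuery, `/` always returns a FLOAT64. In SQLite (as in PostgreSQL and SQL Server), however, dividing two integers truncates, so `(5 / 9)` evaluates to 0 and zeroes out the whole conversion.

```python
import sqlite3

# Hypothetical sample data for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE temperatures (fahrenheit REAL)")
conn.executemany("INSERT INTO temperatures VALUES (?)", [(-40.0,), (32.0,), (212.0,)])
conn.execute("CREATE TABLE scores (points_5 INTEGER)")
conn.executemany("INSERT INTO scores VALUES (?)", [(4,), (5,)])

# Integer division: 5 / 9 is 0 here, so boiling water "converts" to 0.
buggy = conn.execute("SELECT (212.0 - 32) * (5 / 9)").fetchone()[0]

# Writing 5.0 makes the division decimal.
celsius = conn.execute(
    "SELECT fahrenheit, (fahrenheit - 32) * 5.0 / 9 AS celsius "
    "FROM temperatures ORDER BY fahrenheit"
).fetchall()

# Rescale a 5-point score to a 100-point score.
points = conn.execute(
    "SELECT points_5, points_5 * 20 AS points_100 FROM scores ORDER BY points_5"
).fetchall()

print(buggy, celsius, points)
```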

Validate Final Output

The last step in data cleansing in SQL is to check that the dataset meets the required level of quality and reliability. Validation confirms that the data is complete, consistent, accurate, and well-formatted before it is analyzed.

At this stage, answer three questions:

- Does the dataset provide enough information?
- Do all values satisfy their expected constraints?
- Does the cleaned data support or refute the original hypotheses?

Analysts should also check that the data is logically consistent with the real-world situation it describes.
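One lightweight way to make these checks repeatable is to write each rule as a query that should return 0 on a clean table. The sketch below uses SQLite via Python's `sqlite3` module. The `customers` table is hypothetical, and so are the specific rules:

- unique non-NULL IDs
- five-character ZIP codes
- no years in the future

```python
import sqlite3

# Each check counts violations; a clean table returns 0 for every check.
CHECKS = {
    "null_ids": "SELECT COUNT(*) FROM customers WHERE id IS NULL",
    "duplicate_ids": (
        "SELECT COUNT(*) FROM "
        "(SELECT id FROM customers GROUP BY id HAVING COUNT(*) > 1)"
    ),
    "bad_zipcodes": "SELECT COUNT(*) FROM customers WHERE LENGTH(zipcode) <> 5",
    "future_years": (
        "SELECT COUNT(*) FROM customers "
        "WHERE year > CAST(strftime('%Y', 'now') AS INTEGER)"
    ),
}


def validate(conn):
    """Run every check and return only the ones that found problems."""
    failures = {}
    for name, sql in CHECKS.items():
        count = conn.execute(sql).fetchone()[0]
        if count:
            failures[name] = count
    return failures


# Hypothetical data with one duplicate id, one short zip, one future year.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, zipcode TEXT, year INTEGER)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "02134", 2021), (2, "9410", 2022), (2, "94105", 2999)],
)
print(validate(conn))
```

Because the checks live in one dictionary, the same suite can run after every cleaning job.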



Best Practices for Data Cleansing in SQL

Accurate, efficient data cleansing in SQL across different datasets depends on following best practices. Seasoned data experts rely on these essential guidelines:

- Build a complete understanding of the data through rigorous profiling and by mapping it to business needs.

- Maintain complete documentation of cleaning activities, transformation rules, and change history for auditability.

- Test SQL queries on a sample subset before running them on the whole dataset, so that surprises surface early.

- Always back up the original data before running critical transformations, so that it can be safely restored when required.

- Wrap related changes in a transaction with BEGIN and COMMIT so they are applied atomically, and use ROLLBACK to undo them if something fails.

- Optimize query performance with proper indexing and by reviewing execution plans to prevent bottlenecks.

- Ensure long-term data quality with governance policies, periodic validation, and automated monitoring.

- Keep SQL cleaning scripts under version control, define measurable data quality KPIs with alerts, and develop reusable functions for consistency across teams and projects.
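The backup and transaction practices above can be combined. The minimal sketch below uses SQLite through Python's `sqlite3` module, and its table, columns, and cleanup statements are hypothetical. The connection is opened with `isolation_level=None` so that the module issues no implicit transactions and the explicit BEGIN, COMMIT, and ROLLBACK statements are in control.

```python
import sqlite3


def run_cleanup(conn, fail=False):
    """Apply cleanup statements atomically: all succeed or none do."""
    conn.execute("BEGIN")
    try:
        conn.execute("UPDATE customers SET email = LOWER(TRIM(email))")
        conn.execute("DELETE FROM customers WHERE email IS NULL")
        if fail:
            # Hypothetical failure to show that earlier statements are undone.
            raise RuntimeError("simulated failure mid-cleanup")
        conn.execute("COMMIT")
    except Exception:
        conn.execute("ROLLBACK")
        raise


# isolation_level=None: sqlite3 adds no implicit BEGIN/COMMIT of its own.
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute("CREATE TABLE customers (id INTEGER, email TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?)",
    [(1, "  Abby@Example.com "), (2, None)],
)
# Keep an untouched copy of the raw data before any transformation.
conn.execute("CREATE TABLE customers_backup AS SELECT * FROM customers")

try:
    run_cleanup(conn, fail=True)
except RuntimeError:
    pass
after_failure = conn.execute("SELECT id, email FROM customers ORDER BY id").fetchall()

run_cleanup(conn)
after_success = conn.execute("SELECT id, email FROM customers ORDER BY id").fetchall()
backup = conn.execute("SELECT id, email FROM customers_backup ORDER BY id").fetchall()
print(after_failure, after_success, backup)
```

After the simulated failure, the table is exactly as it was. The successful run then applies both statements together, and the backup table keeps the raw values throughout.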

=> Want a more efficient way to clean data in SQL? Our data cleansing services might be just what you need.


There is no single method for data cleansing in SQL, since every dataset has its own issues. Nevertheless, a sensible eight-step process provides a sound foundation for handling typical errors and applying the right remedies.

For businesses committed to enterprise-class data practices, DIGI TEXX provides end-to-end expertise in data operations, automation, and digital transformation. Drawing on its experience managing high-volume data, DIGI TEXX helps businesses build strong, clean datasets that drive better decisions and lasting growth.
